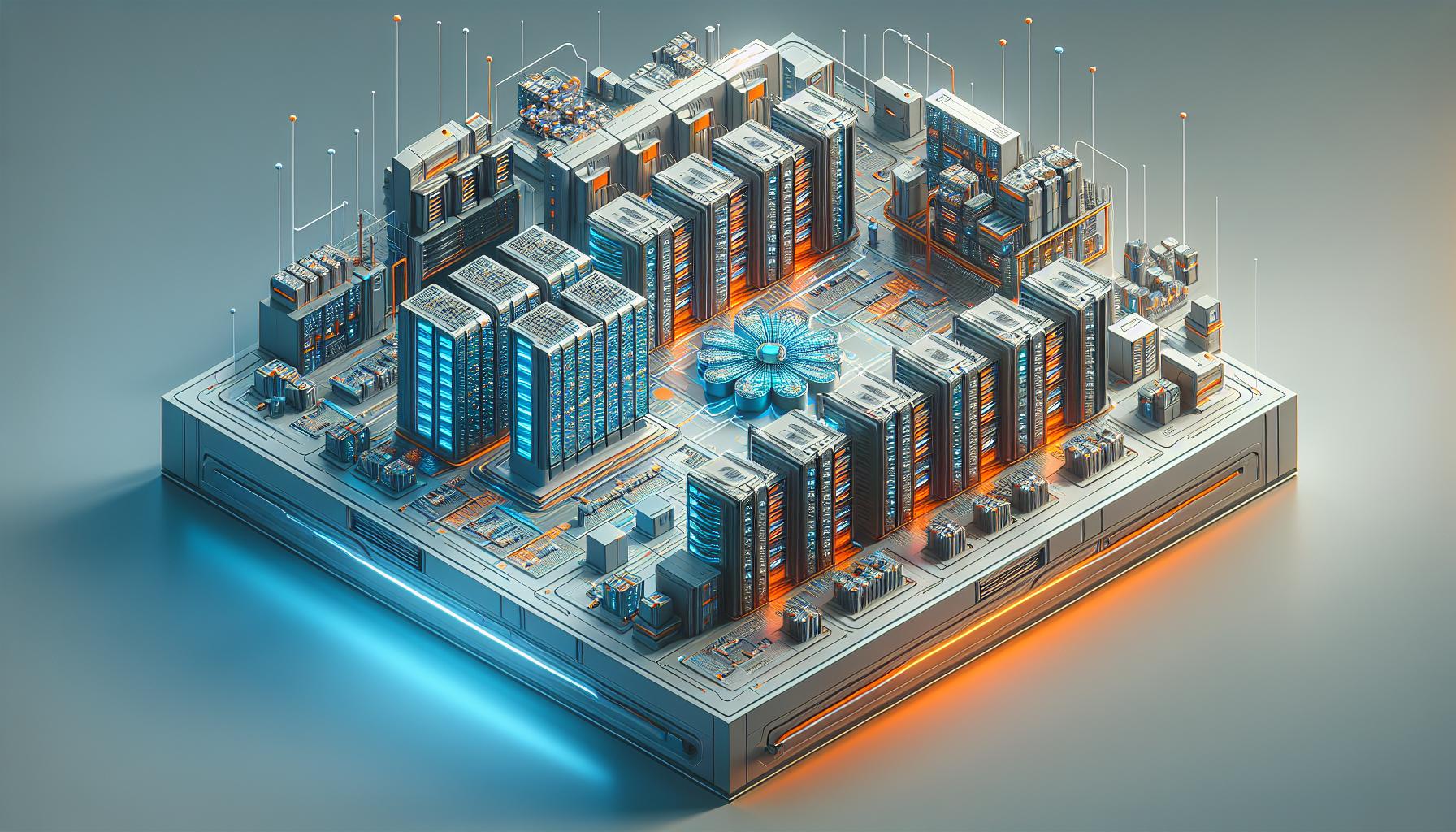

The rapid advancement of generative AI is transforming data center infrastructure, and organizations are being pushed to update their technology strategies to meet rising computational demands. Growth in large language models and deep learning is eroding the traditional dominance of x86 CPUs in data centers. The trend is driving a move toward specialized accelerators such as GPUs and TPUs, alongside ARM-based processors, which offer better efficiency for AI-focused workloads.

In this evolving landscape, Data Processing Units (DPUs) are gaining popularity in heterogeneous computing environments. They offload network and storage tasks, freeing CPUs to concentrate on intensive AI and machine learning workloads. Hyperconverged infrastructure is also seeing a resurgence as an enabler for AI: by merging compute, storage, and networking into a single, cohesive system, it supports the flexible scaling that robust AI operations require.

The complex requirements of generative AI are also driving demand for multicloud networking. As companies manage hybrid setups that blend on-premises data centers, public clouds, and edge technologies, integrated networking is crucial for moving data and applications smoothly across platforms.

As AI technology advances, data centers face growing pressure to adapt. Operators are focusing on hardware and networking improvements to support new AI models that demand faster response times and better energy efficiency. This shift marks a pivotal moment for the telecom, data, AI, and hardware industries as they prepare for the challenges posed by generative AI.
