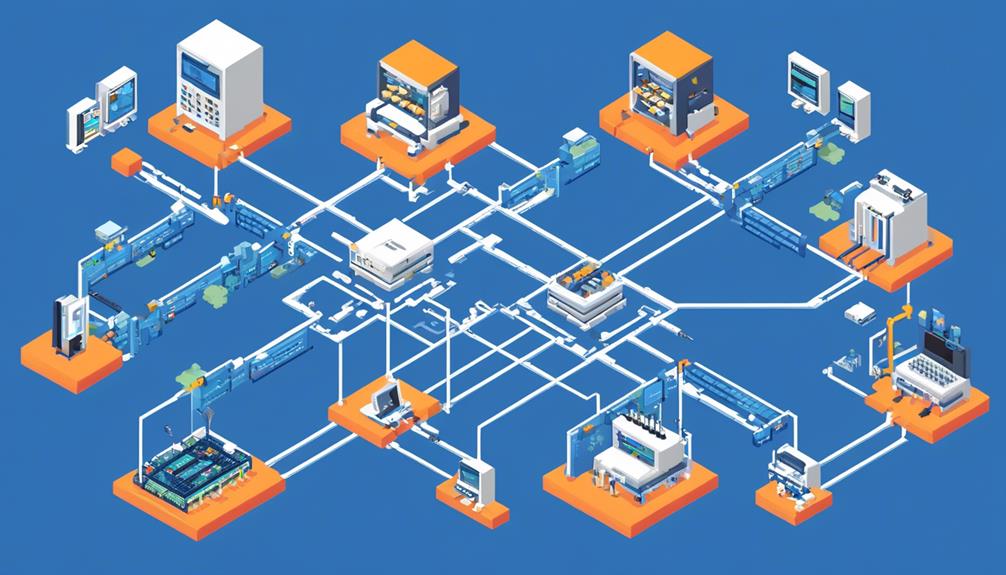

Cloud networking and load balancing hardware have become indispensable in today's digital landscape. Businesses strive to deliver seamless user experiences and handle high volumes of web traffic. The efficient distribution of network load is essential for maintaining optimal performance and preventing server failures.

Traditionally, dedicated hardware load balancers have handled this task. With the rise of cloud computing, however, a more agile and cost-effective alternative has emerged: cloud load balancing.

Cloud load balancing offers several benefits. It allows businesses to distribute incoming network traffic across multiple servers or resources in different locations, ensuring that no single server becomes overwhelmed. This ensures high availability and reliability for applications and services.

Additionally, cloud load balancing offers scalability. As web traffic fluctuates, cloud load balancers can automatically adjust resources to handle increased demand. This flexibility allows businesses to scale their infrastructure up or down as needed, without the need for manual intervention.

There are different types of load balancing hardware available. Traditional hardware load balancers are physical devices that sit between the client and the server. They distribute traffic based on criteria such as round-robin, least connections, or IP affinity.

On the other hand, cloud load balancing is a software-based solution provided by cloud service providers. It leverages the scalability and flexibility of the cloud to distribute traffic across virtual servers or resources. This type of load balancing is highly scalable and can handle large volumes of traffic.

In recent years, the field of cloud networking and load balancing hardware has seen several trends. One such trend is the shift towards software-defined networking (SDN). SDN allows businesses to manage and control their network infrastructure through software, providing greater flexibility and agility.

Another trend is the adoption of containerization and microservices architecture. This approach allows applications to be broken down into smaller, independent components that can be easily deployed and scaled. Load balancing plays a crucial role in distributing traffic across these components and ensuring optimal performance.

In conclusion, understanding the intricacies of cloud networking and load balancing hardware is crucial for businesses in today's interconnected world. Cloud load balancing offers benefits such as high availability, scalability, and flexibility. By staying updated on the latest trends and technologies in this field, businesses can ensure their infrastructure is equipped to handle the demands of the digital landscape.

Key Takeaways

- Load balancing ensures equitable distribution of web traffic across multiple network servers, optimizing network performance and enhancing user experience.

- Cloud-based load balancers offer global server load balancing for distributed environments, ensuring high availability and fault tolerance.

- Cloud networking provides scalability advantages, allowing businesses to easily scale resources up or down, and offers cost savings by paying for resources used and avoiding upfront hardware expenses.

- Load balancing hardware is a critical component of network infrastructure in cloud computing environments, improving performance, preventing server overload, and providing reliability and fault tolerance.

Load Balancing Basics

Load balancing is an essential technique used in networking to ensure the equitable distribution of web traffic across multiple network servers. It plays a crucial role in optimizing network performance and enhancing the user experience. Load balancing can be based on factors such as available server connections, processing power, and resource utilization. The primary goal of load balancing is scalability, enabling the system to handle varying traffic loads and maintain site accessibility.

Different routing techniques and algorithms are employed to achieve optimal performance in load balancing scenarios. Cloud-based load balancers offer global server load balancing and are suitable for highly distributed environments, such as those found in cloud computing. These load balancers distribute network traffic across multiple data centers and provide high availability and fault tolerance.

On the other hand, hardware-based load balancing is more suitable for single data center scenarios. These load balancers are physical devices that sit between the network servers and the clients, efficiently distributing network traffic among the servers. They typically offer advanced features, such as SSL termination and application layer awareness, which further enhance performance and security.

Load balancing in the cloud brings additional benefits, such as scalability on-demand, automatic failover, and centralized management. Cloud load balancers can dynamically scale up or down based on the traffic load, ensuring optimal resource utilization and cost efficiency. They also provide flexibility in deploying and managing applications across multiple cloud instances.

Benefits of Cloud Networking

Cloud networking offers several benefits, including scalability advantages, cost savings, and improved performance.

With cloud networking, businesses can easily scale their resources up or down to meet changing demands without the need for additional hardware purchases. This scalability also allows for cost savings, as businesses only pay for the resources they actually use.

Additionally, cloud networking can improve performance by leveraging the flexibility and scalability of the cloud for distributed workloads.

Scalability Advantages

Cloud networking helps organizations optimize their infrastructure by combining scalability with cost savings.

When it comes to load balancing in the cloud, there are several advantages to consider:

- On-Demand Scaling: Cloud load balancers allow for easy scaling without the need for additional hardware purchases. This means organizations can quickly and efficiently handle increased traffic without investing in costly hardware load balancers.

- High Availability: Cloud load balancing provides responsive rerouting in failover scenarios, ensuring continuous availability without the need for additional hardware investments. This helps organizations maintain a scalable service system while minimizing downtime.

- Cost Savings: By leveraging cloud load balancers, organizations can avoid the higher costs associated with purchasing and maintaining hardware load balancers for scalability. This allows for more efficient use of resources and lower costs for the organizations that consume cloud services.

Cost Savings

Cost savings are a significant advantage of cloud networking. One way this is achieved is by eliminating upfront hardware expenses and scaling resources on-demand. Cloud load balancing specifically helps businesses avoid the high initial investment and ongoing maintenance costs associated with hardware load balancers. The pay-as-you-go model of cloud load balancing can result in significant cost savings, especially for organizations with fluctuating traffic patterns.

By dynamically distributing traffic across multiple servers, cloud load balancing eliminates the need to overprovision hardware. This leads to optimized resource utilization and reduced operational costs. Leveraging cloud load balancing can therefore lead to cost savings by avoiding the need for additional hardware purchases and by utilizing the scalability and elasticity of cloud resources.

Improved Performance

With the elimination of upfront hardware expenses and the ability to scale resources on-demand, cloud networking offers businesses not only cost savings but also improved performance.

Here are three ways cloud networking enhances performance:

- Load Balancing: Cloud load balancing distributes workloads across computing resources, optimizing response times and reducing latency. This ensures high performance levels and increased throughput.

- Scalability: Cloud load balancing leverages scalability and agility, allowing businesses to handle increased traffic and workload demands. Automatic scaling and failover features contribute to improved overall availability.

- Provider Features: Cloud load balancing services provided by AWS, Google Cloud, Azure, and Rackspace offer flexibility, scalability, and improved performance compared to hardware-based load balancing. These services also provide integrated certificate management, further enhancing performance and security.

Importance of Load Balancing Hardware

Load balancing hardware is essential for ensuring optimal distribution of web traffic across multiple servers, improving performance and preventing server overload. With the increasing demand for high availability and scalability in cloud computing environments, load balancing has become a critical component of network infrastructure.

A hardware load balancer acts as a traffic manager, distributing incoming requests across multiple servers based on predefined algorithms and routing techniques. This ensures that no single server becomes overwhelmed with traffic, leading to improved response times and reduced downtime. By evenly distributing the workload, load balancing hardware allows for efficient resource utilization and the ability to handle sudden traffic surges.

To further illustrate the importance of load balancing hardware, consider the following table:

| Benefits of Load Balancing Hardware | Examples |
|---|---|
| Improved performance | Reduced response times and increased throughput |
| Scalability | Efficient resource utilization and the ability to handle traffic surges |
| Reliability and fault tolerance | Reduced risk of site inaccessibility due to server failures |
| Flexible routing techniques | Randomized routing, factors-based routing |
| Cloud load balancing | Suitable for highly distributed environments and offers global server load balancing |

Load balancing hardware plays a crucial role in maintaining the stability and performance of web applications, especially in on-premises data centers. However, in highly distributed cloud environments, cloud load balancing solutions are more suitable and offer global server load balancing for improved availability.

Understanding Cloud Computing Infrastructure

Understanding cloud computing infrastructure requires understanding hardware load balancers and their role in distributing web traffic across multiple network servers. Load balancing is a crucial part of that infrastructure because it supports performance, scalability, and availability. Here are three key points to consider:

- Scalability: Hardware load balancers play a vital role in scaling cloud computing infrastructure. By distributing incoming web traffic across multiple servers, they prevent the overloading of a single server, ensuring that the workload is evenly distributed. This scalability is essential for handling increasing traffic demands and maintaining service availability.

- Optimal Load Distribution: Load balancing devices, whether hardware or cloud-based, employ various routing techniques and algorithms to achieve optimal load distribution. These techniques may include randomized routing, where traffic is distributed randomly across servers, or routing based on factors like server processing power and resource utilization. The goal is to evenly distribute the workload and prevent any single server from becoming overwhelmed.

- Cloud Load Balancer vs. Hardware Load Balancing Device: While both cloud load balancers and hardware load balancing devices serve the same purpose, there are some key differences. Cloud load balancers offer global server load balancing and are suitable for highly distributed environments. They provide scalability, flexibility, and cost-effectiveness. On the other hand, hardware load balancing devices have higher upfront costs and lack the same level of scalability compared to load balancing as a service (LBaaS) subscriptions in the cloud. However, they are still widely used in certain scenarios where specific hardware features or configurations are required.

Understanding the cloud computing infrastructure involves recognizing the role of load balancing devices, such as hardware load balancers, in distributing web traffic across multiple servers. These devices ensure scalability, optimal load distribution, and play a crucial role in maintaining the availability and performance of cloud-based applications and services.

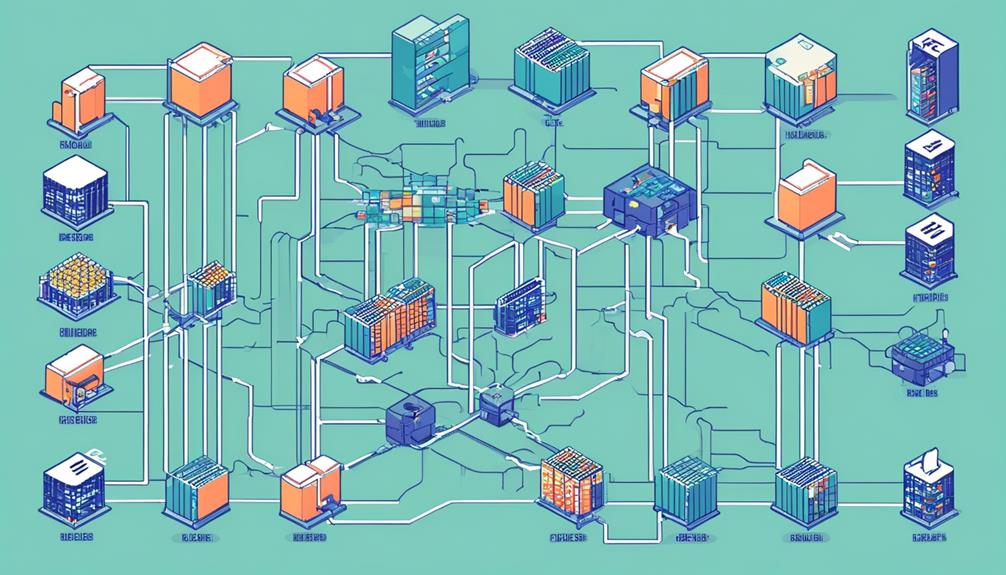

Types of Load Balancing Hardware

There are various types of load balancing hardware available for distributing web traffic across multiple network servers. These hardware devices play a crucial role in ensuring the efficient distribution of workload in cloud computing environments. Hardware load balancers use randomized or factor-based routing techniques to evenly distribute traffic among the available servers.
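The difference between randomized and factor-based routing can be sketched in a few lines of Python. This is only an illustration, not how any particular appliance works: the server names and the capacity scores in `CAPACITY` are hypothetical, and a real device would derive such factors from measured processing power or resource utilization.

```python
import random

# Hypothetical pool: names and capacity scores are illustrative only.
CAPACITY = {"app-1": 4, "app-2": 2, "app-3": 1}


def pick_random(servers, rng=random):
    """Randomized routing: every server is equally likely to be chosen."""
    return rng.choice(list(servers))


def pick_weighted(capacity, rng=random):
    """Factor-based routing: selection probability is proportional to a score."""
    servers = list(capacity)
    weights = [capacity[name] for name in servers]
    return rng.choices(servers, weights=weights, k=1)[0]


if __name__ == "__main__":
    rng = random.Random(0)  # seeded so repeated runs give the same picks
    print([pick_weighted(CAPACITY, rng) for _ in range(5)])
```

With the scores above, `app-1` should receive roughly four times as many requests as `app-3` over a long run, whereas `pick_random` ignores capacity entirely.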

Scalability is a primary goal of load balancing hardware as it helps reduce site inaccessibility due to server failures. Different routing techniques and algorithms are employed to ensure optimal performance in various load balancing scenarios. These hardware devices are designed to handle high volumes of traffic and provide failover capabilities, ensuring uninterrupted service.

However, it's important to note that hardware load balancers have predefined limitations in terms of scalability. In contrast, cloud load balancers offer global server load balancing and scalability, making them more suitable for modern cloud computing environments. Cloud load balancers are agile and offer responsive rerouting, allowing for efficient load distribution across virtual machines.

While hardware load balancers typically work only alongside other hardware appliances, cloud load balancers provide greater flexibility and adaptability in dynamic cloud environments. They can handle changing traffic patterns and adjust routing accordingly, ensuring optimal performance and resource utilization.

Network Layer Load Distribution Techniques

Network layer load distribution techniques play a crucial role in optimizing performance and ensuring high availability in cloud networking and load balancing.

Round Robin Load Balancing evenly distributes traffic across multiple servers, while the Least Connections Algorithm routes traffic to the server with the fewest active connections.

IP Hash Load Distribution, on the other hand, uses source IP addresses to determine server selection.

These techniques provide efficient load distribution and contribute to the scalability and reliability of network infrastructure.

Round Robin Load Balancing

Round robin load balancing is a widely used technique in network layer load distribution for evenly distributing incoming connections among servers. It operates by sequentially routing each new request to the next available server in a circular order. This technique ensures fairness and prevents any single server from being overwhelmed by requests.

Round robin load balancing is suitable for scenarios where all servers have similar capabilities and resources. It is commonly used in both hardware load balancers and cloud-based load balancers to achieve optimal performance in distributing network traffic.

In cloud computing environments, round robin load balancing can be implemented using a network load balancer, which evenly distributes traffic across multiple servers. Additionally, it can also be utilized in global server load balancing to distribute network traffic across geographically dispersed servers.
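As a minimal sketch of the rotation described above, the following Python class hands out servers in circular order. The class name and the server labels are assumptions made for illustration; production load balancers add health checks, weights, and concurrency control on top of this idea.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers one after another, wrapping around at the end."""

    def __init__(self, servers):
        servers = list(servers)
        if not servers:
            raise ValueError("at least one server is required")
        self._rotation = cycle(servers)

    def next_server(self):
        # Each call advances the rotation by exactly one position.
        return next(self._rotation)


if __name__ == "__main__":
    balancer = RoundRobinBalancer(["a", "b", "c"])  # hypothetical server labels
    print([balancer.next_server() for _ in range(7)])
```

Because the rotation never looks at server state, every server receives the same number of requests over time, which is why the technique suits pools of similar machines.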

Least Connections Algorithm

The Least Connections algorithm is a highly effective network layer load distribution technique that ensures even distribution of load by sending traffic to the server with the fewest number of active connections.

It is a method used by load balancers, both hardware and cloud-based, to optimize server utilization and prevent overloading.

By monitoring real-time server loads and routing traffic to the server with the fewest active sessions, the algorithm enhances the overall performance of the network.

This approach is crucial in cloud networking, where efficient resource utilization and optimal user experience are paramount.

The Least Connections algorithm is a reliable tool in load balancing, as it dynamically adjusts the distribution of traffic to maintain equilibrium across the server pool, resulting in improved network performance and user satisfaction.
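The bookkeeping behind this algorithm can be sketched as follows. This is an illustrative model only: the class and method names are assumptions, and ties are broken by pool order here, which real load balancers may handle differently.

```python
class LeastConnectionsBalancer:
    """Tracks active connections per server and picks the least busy one."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def acquire(self):
        # min() returns the first server with the lowest count, so ties
        # are resolved in the order the servers were listed.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Called when a connection closes; never drops below zero.
        if self.active[server] > 0:
            self.active[server] -= 1


if __name__ == "__main__":
    balancer = LeastConnectionsBalancer(["a", "b", "c"])  # hypothetical labels
    first_three = [balancer.acquire() for _ in range(3)]
    balancer.release("b")       # "b" finishes its connection early
    print(first_three, balancer.acquire())
```

The key difference from round robin is the `release` step: the balancer needs feedback about closed connections in order to know which server is currently least loaded.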

IP Hash Load Distribution

With the aim of maintaining session persistence and enabling consistent server selection for each client, IP Hash Load Distribution is a network layer load distribution technique that utilizes the source or destination IP address to determine the appropriate server for handling requests.

This technique ensures that requests from the same source IP address are always directed to the same server, thereby maintaining session persistence. IP Hash Load Distribution offers consistent server selection for each client, enabling better caching and session management.

It can be particularly useful in scenarios where maintaining the client's session on a specific server is crucial, such as in e-commerce or banking applications. However, it may not be ideal for scenarios requiring dynamic load balancing, where traffic needs to be distributed based on server load or availability.
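One simple way to realize this mapping is to hash the client address and take the result modulo the pool size. The sketch below assumes SHA-256 as the hash and uses documentation-range IP addresses and made-up server names; actual products use their own hash functions.

```python
import hashlib


def pick_by_ip(client_ip, servers):
    """Map a client IP to a server so the same IP always gets the same server."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an integer, then wrap to the pool.
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


if __name__ == "__main__":
    pool = ["app-1", "app-2", "app-3"]  # hypothetical server names
    for ip in ("192.0.2.10", "192.0.2.11", "192.0.2.10"):
        print(ip, "->", pick_by_ip(ip, pool))
```

Note that with a plain modulo, adding or removing a server changes the mapping for many clients, which is one reason this approach fits stable pools better than pools that scale frequently.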

Application Layer Load Distribution Methods

Application Layer Load Distribution Methods focus on efficiently distributing web traffic across multiple network servers at the application layer level. These methods utilize routing algorithms such as round-robin, least connections, and least pending requests to distribute traffic based on factors like server connections and processing power. The primary goal of application layer load distribution is scalability, reducing the impact of server failures and ensuring optimal performance in varying load balancing scenarios.

To better understand these methods, let's take a look at a comparison table:

| Routing Method | Description | Advantages | Disadvantages |
|---|---|---|---|
| Round-Robin | Distributes incoming requests equally among servers in a rotation. | Easy to implement, ensures balanced workloads. | Does not account for server capacity or traffic. |
| Least Connections | Directs requests to the server with the fewest active connections. | Efficiently distributes workload, prevents overloading. | May not consider server processing power or response time. |
| Least Pending Requests | Routes requests to the server with the fewest pending requests. | Balances workload based on server responsiveness. | May not consider server capacity or traffic. |

Cloud-based load balancers offer more agile distribution compared to hardware load balancers, as they can manage all routing and ensure responsive rerouting in failover and disaster recovery scenarios. Additionally, application layer load distribution methods can be applied across multiple data centers, enabling workload distribution across geographic locations to meet varying workload demands.

Factors to Consider When Choosing Load Balancers

When selecting load balancers, there are several key factors to consider in order to ensure scalability, optimal load distribution, and efficient routing techniques and algorithms. These factors play a crucial role in achieving high performance and availability for application servers in cloud computing environments.

Here are three important factors to consider:

- Scalability: Load balancers should have the ability to scale horizontally to accommodate increasing traffic demands. Cloud load balancers offer superior scalability compared to hardware load balancers, as they can dynamically add or remove servers based on real-time traffic patterns. This ensures that application servers can handle a growing number of requests without impacting performance.

- Optimal Load Distribution: Load balancers should evenly distribute incoming network traffic across multiple application servers. This can be achieved through various distribution algorithms such as round-robin, least connections, and IP hash. It is important to select a load balancer that supports these algorithms and allows for fine-tuning to achieve optimal load distribution based on the specific requirements of the application.

- Routing Techniques and Algorithms: Load balancers should employ efficient routing techniques and algorithms to direct traffic to the most suitable application server. This includes considering factors like server health, proximity, and capacity. Cloud load balancers often offer more advanced routing techniques, such as global server load balancing, which allows for intelligent routing based on geolocation or latency, resulting in improved performance and user experience.
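The latency-aware routing mentioned in the last point can be sketched as a selection over healthy locations. The region names and latency figures below are hypothetical placeholders, and a real global server load balancer would obtain them from ongoing measurements rather than a static dictionary.

```python
def pick_region(latency_ms, healthy):
    """Return the healthy region with the lowest measured latency."""
    candidates = {
        region: ms for region, ms in latency_ms.items() if region in healthy
    }
    if not candidates:
        raise RuntimeError("no healthy region available")
    return min(candidates, key=candidates.get)


if __name__ == "__main__":
    # Hypothetical measurements for one client, in milliseconds.
    measured = {"us-east": 40, "eu-west": 15, "ap-south": 120}
    print(pick_region(measured, healthy={"us-east", "eu-west", "ap-south"}))
    print(pick_region(measured, healthy={"us-east", "ap-south"}))
```

Combining the health filter with the latency comparison shows how the factors named above (server health, proximity) interact: the closest region wins only while it is healthy.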

Scalability and Compatibility Considerations

Scalability is a critical factor in load balancing as it ensures the ability to handle increasing traffic and server demands effectively. However, hardware load balancers may face challenges in scalability due to predefined limitations.

Compatibility considerations are also important to ensure seamless integration with existing infrastructure and cloud services.

Scalability Challenges

In considering the challenges of scalability and compatibility, it is evident that cloud load balancers offer superior flexibility and adaptability compared to their hardware counterparts.

Here are three key scalability challenges that organizations face when using hardware load balancers:

- Limited scalability: Hardware load balancers have predefined limitations in terms of the number of servers they can handle. When organizations need to scale their infrastructure to meet increased demand, they often have to invest in additional appliances, which can be time-consuming and costly.

- Lack of compatibility with cloud servers: Hardware load balancers are typically designed to work with other appliances and are not compatible with cloud servers. This restricts organizations from efficiently distributing the load across multiple servers in a cloud environment.

- Scalability and availability limitations: Hardware load balancers may struggle to scale and maintain high availability during peak times. As the number of requests increases, hardware load balancers may become overwhelmed, leading to performance issues and potential downtime.

In contrast, cloud load balancers offer on-demand scalability, compatibility with both load balancing hardware and cloud servers, and the ability to handle high traffic loads efficiently. These advantages make cloud load balancers the preferred choice for organizations seeking scalable and highly available load balancing solutions.

Compatibility Issues

Compatibility with existing infrastructure is a crucial consideration when evaluating load balancing solutions.

Cloud load balancers offer more compatibility compared to load balancing hardware. While cloud load balancers can work with both cloud servers and load balancer devices, hardware load balancers are only compatible with other appliances and cannot distribute load to cloud servers. This limitation requires additional hardware purchases for scalability, costing time and money.

In contrast, cloud load balancers can scale on demand without additional hardware purchases. Compatibility therefore comes down to whether a load balancer can work across the hardware and cloud environments an organization uses.

Therefore, when considering load balancing solutions, it is important to assess the compatibility of the load balancer with the desired infrastructure, with cloud load balancers offering a more versatile and scalable option compared to hardware load balancers.

Single Data Center Load Balancing

Single Data Center Load Balancing involves the distribution of web traffic across servers within a single data center, ensuring efficient performance and high availability. This process is essential for organizations that operate from a single data center and rely on multiple application servers to handle incoming requests. To achieve load balancing in a single data center environment, organizations typically deploy a load balancer, whether a hardware appliance or a software-based one, that intelligently distributes traffic among the available servers.

Here are three key aspects of single data center load balancing:

- Routing Methods: Load balancing in a single data center can be achieved through various routing methods. Round-robin routing evenly distributes requests across servers, ensuring each server handles an equal share of the traffic. Alternatively, load balancing can be performed based on server connections and resource utilization, diverting traffic to servers with lower loads. These methods ensure optimal resource utilization and prevent any single server from becoming overwhelmed.

- Scalability: The primary goal of single data center load balancing is to handle varying traffic loads efficiently. By distributing traffic across multiple servers, organizations can scale their applications to handle increased demand without compromising performance. This scalability ensures that even during peak periods, the system remains responsive and prevents any single server from becoming a bottleneck.

- High Availability: Load balancing in a single data center environment also provides high availability. By distributing traffic across multiple servers, the system can continue to function even if a single server fails. The load balancer detects the failure and redirects traffic to the remaining servers, minimizing downtime and ensuring uninterrupted service for end-users.
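The failover behavior described in the last point can be sketched as a round-robin rotation that skips servers marked as down. The class and method names are assumptions for illustration; how failures are detected (for example, by periodic health probes) is left outside the sketch.

```python
class FailoverPool:
    """Round-robin rotation that skips servers currently marked as down."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._next = 0

    def mark_down(self, server):
        self.healthy.discard(server)

    def mark_up(self, server):
        if server in self.servers:
            self.healthy.add(server)

    def next_server(self):
        # Try each position at most once so the loop always terminates.
        for _ in range(len(self.servers)):
            server = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")


if __name__ == "__main__":
    pool = FailoverPool(["a", "b", "c"])  # hypothetical server labels
    pool.mark_down("b")                   # simulate a detected failure
    print([pool.next_server() for _ in range(4)])
```

Traffic keeps flowing to the remaining servers while one is down, and the failed server rejoins the rotation as soon as `mark_up` is called.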

Cross Data Center Load Balancing

Cross Data Center Load Balancing is a critical component of cloud networking and load balancing hardware. It enables businesses with a global presence or distributed infrastructure to efficiently distribute traffic across multiple data centers, ensuring high availability and optimal performance.

Multi-DC Load Balancing

Multi-DC load balancing, also known as Cross Data Center Load Balancing, is a crucial technique for distributing web traffic across multiple data centers. It ensures high availability and efficient utilization of resources by evenly distributing the incoming traffic among different application servers in different data centers.

Here are three key points to understand about multi-DC load balancing:

- Improved Performance: Multi-DC load balancing optimizes the use of resources by distributing the traffic across multiple data centers. This improves response times and ensures that users experience minimal delays or downtime.

- High Availability: By distributing the load across multiple data centers, multi-DC load balancing enhances the resilience of the system. If one data center goes down, traffic can be automatically redirected to another data center, ensuring uninterrupted service.

- Scalability: Multi-DC load balancing allows for easy scalability as traffic demands increase. Additional data centers can be added to the load balancing setup, providing the flexibility to handle growing traffic loads effectively.

Global Traffic Distribution

Efficient distribution of web traffic across multiple data centers is achieved through Global Traffic Distribution, also known as Cross Data Center Load Balancing. This technique optimizes performance and reduces site inaccessibility by distributing incoming traffic across application servers in different data centers.

To achieve optimal load distribution, various routing techniques and algorithms are utilized. Without centralized Global Server Load Balancing (GSLB) appliances, cross data center setups rely on DNS records and their Time-To-Live (TTL) values to steer traffic, which results in uneven distribution and delays before routing changes take effect.

Cloud-based load balancers offer more agility, scalability, and compatibility compared to hardware load balancers, as they eliminate the need for additional purchases and maintenance for cross data center load balancing.

Maintenance Costs of Load Balancing Hardware

Maintenance costs for load balancing hardware encompass a range of regular hardware maintenance activities, including firmware updates, hardware component replacements, and system upgrades. These costs are an essential aspect of ensuring the efficient and effective operation of the load balancer.

Here are three key points to consider regarding the maintenance costs of load balancing hardware:

- Ongoing monitoring and support services: Load balancers play a crucial role in distributing incoming network traffic across multiple application servers. To ensure optimal performance, ongoing monitoring and support services are necessary. This includes regular check-ups, performance monitoring, and troubleshooting to address any issues that may arise.

- Specialized technical expertise: Maintaining load balancing hardware may require specialized technical expertise. This expertise is necessary for tasks such as firmware updates, component replacements, and system upgrades. Having access to skilled professionals who can handle these tasks efficiently can help reduce downtime and ensure the load balancer operates smoothly.

- Additional costs: Maintenance costs for load balancing hardware may also include expenses related to warranty renewals, licensing, and support contracts. These additional costs are necessary to ensure access to the latest updates, patches, and technical support, which are vital for the continued functionality and security of the load balancer.

Distribution Algorithms Used in Load Balancers

Load balancers employ various distribution algorithms, such as round-robin and least connections, to efficiently distribute incoming network traffic across multiple servers while minimizing the impact of server failures. These algorithms play a crucial role in load balancing, ensuring that application servers handle requests effectively and evenly.

Round-robin is a commonly used distribution algorithm where each new connection is assigned to the next available server in a cyclical manner. This approach evenly distributes the load across servers, preventing any single server from becoming overwhelmed. However, it does not consider the current load or capacity of each server, which may lead to imbalanced distribution in certain scenarios.

To address this limitation, the least connections algorithm is often employed. This algorithm directs new connections to the server with the fewest active connections at any given time, so the load is distributed according to each server's current workload rather than a fixed rotation. This approach is particularly effective when connection durations vary widely. Because it counts connections rather than measuring capacity, it does not by itself account for differences in server processing power.
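A small simulation can make the contrast between the two algorithms concrete. The sketch below is a toy model under stated assumptions: one request arrives per tick, each request holds a connection for a fixed number of ticks, and the alternating long and short durations are invented to show the effect rather than taken from real traffic.

```python
def simulate(durations, n_servers, policy):
    """Return the peak number of concurrent connections seen on each server."""
    finish_times = [[] for _ in range(n_servers)]  # end ticks of open connections
    peak = [0] * n_servers
    for tick, duration in enumerate(durations):
        # Close every connection that has finished by this tick.
        for i in range(n_servers):
            finish_times[i] = [end for end in finish_times[i] if end > tick]
        if policy == "round_robin":
            target = tick % n_servers
        elif policy == "least_connections":
            target = min(range(n_servers), key=lambda i: len(finish_times[i]))
        else:
            raise ValueError(f"unknown policy: {policy}")
        finish_times[target].append(tick + duration)
        peak[target] = max(peak[target], len(finish_times[target]))
    return peak


if __name__ == "__main__":
    workload = [10, 1] * 10  # hypothetical: long and short requests alternate
    print("round robin:      ", simulate(workload, 2, "round_robin"))
    print("least connections:", simulate(workload, 2, "least_connections"))
```

In this contrived workload, round robin sends every long request to the same server, while least connections spreads the open connections more evenly because it reacts to what each server is currently holding.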

Additionally, some load balancers use more advanced algorithms, such as least pending requests. This algorithm considers not only the number of active connections but also the number of pending requests on each server. By taking into account the pending requests, the load balancer can make more informed decisions in distributing traffic and further optimize the overall performance.

It is worth noting that the choice of distribution algorithm depends on the specific requirements of the application and the characteristics of the servers involved. Both hardware and cloud load balancers support a range of distribution algorithms, allowing for flexibility and adaptability in different load balancing scenarios.

Cloud load balancers, in particular, offer advantages in terms of scalability, compatibility, and agile distribution compared to traditional hardware load balancers.

TTL Reliance and Its Impact on Load Balancing

TTL reliance in load balancing can have significant impacts on distribution efficiency and agility. When load balancers rely on Time-to-Live (TTL) values, there are several consequences that can affect the performance and availability of application servers. Here are three key points to consider:

- Delayed routing changes: TTL reliance can lead to uneven and non-agile distribution, especially in cross-data center setups without centralized Global Server Load Balancing (GSLB) appliances. With TTL-based load balancing, routing changes may take minutes to propagate, causing delays in redirecting traffic to healthy servers. This delay can result in decreased service availability and potential disruptions for end users.

- Caching refresh delays: When a TTL-based load balancer changes its routing, ISP resolvers and client devices keep using the DNS record they cached until its TTL expires. As a result, struggling servers may continue to receive traffic until caches are refreshed. This further burdens overloaded servers and hampers overall load balancing efficiency.

- Cloud-based load balancers as a solution: Cloud-based load balancers offer a more agile and responsive alternative to TTL reliance. With these solutions, load balancing is managed within the cloud infrastructure, eliminating DNS-related delays. In failover and disaster recovery scenarios, cloud-based load balancers can ensure responsive rerouting, minimizing service disruptions and maximizing service availability.
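The caching delay described in the points above can be illustrated with a toy model. This is a simplified sketch, not a real DNS implementation: the `CachingResolver` class, the hostname, the 300-second TTL, and the documentation-range IP addresses are all assumptions chosen for illustration.

```python
class CachingResolver:
    """Toy DNS cache: remembers an answer until its TTL expires."""

    def __init__(self, authoritative, ttl_seconds):
        self.authoritative = authoritative  # dict standing in for the DNS zone
        self.ttl = ttl_seconds
        self._cache = {}  # hostname -> (ip, expires_at)

    def resolve(self, hostname, now):
        cached = self._cache.get(hostname)
        if cached and now < cached[1]:
            return cached[0]  # still within TTL: serve the cached answer
        ip = self.authoritative[hostname]
        self._cache[hostname] = (ip, now + self.ttl)
        return ip


zone = {"app.example.com": "192.0.2.10"}
resolver = CachingResolver(zone, ttl_seconds=300)

before = resolver.resolve("app.example.com", now=0)    # cached until t=300
zone["app.example.com"] = "198.51.100.10"              # failover happens at t=10
during = resolver.resolve("app.example.com", now=60)   # still the old address
after = resolver.resolve("app.example.com", now=300)   # cache expired, new address
print(before, during, after)
```

In this model, clients keep being sent to the failed address for almost five minutes after the record changes, which is the window a load balancer that reroutes traffic itself avoids.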

Latest Trends in Load Balancing Hardware

In the realm of load balancing, the latest trends in hardware focus on optimizing performance and distributing traffic according to customized rules in on-premises data centers. Load balancers play a crucial role in efficiently distributing incoming requests across multiple application servers, ensuring high availability and scalability.

The following table summarizes these trends:

| Trend | Description | Benefits |
|---|---|---|
| Customized Rule-based Load Balancing | Load balancers now allow administrators to define custom rules for traffic distribution. This enables fine-grained control over load balancing decisions based on factors like user location, URL path, or specific application requirements. | Increased flexibility and optimized resource utilization |
| SSL/TLS Offloading | Load balancers are equipped with SSL/TLS termination capabilities, allowing them to handle the encryption and decryption of traffic. This offloads the CPU-intensive task from application servers, improving their performance and scalability. | Enhanced security, reduced server load, and improved response times |
| Application-aware Load Balancing | Modern load balancers can intelligently analyze application-layer data to make informed traffic distribution decisions. By understanding the specific requirements and behavior of applications, load balancers can optimize performance, ensure session persistence, and improve overall user experience. | Improved application performance and user satisfaction, enhanced scalability |
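
To make the rule-based trend more concrete, here is a minimal sketch of first-match routing on URL path and user location. The `Rule` type, the pool names, and the country codes are hypothetical; real load balancers express such rules in their own configuration languages.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Rule:
    pool: str
    path_prefix: Optional[str] = None  # None means "any path"
    country: Optional[str] = None      # None means "any location"

    def matches(self, path, country):
        if self.path_prefix is not None and not path.startswith(self.path_prefix):
            return False
        if self.country is not None and country != self.country:
            return False
        return True


def route(rules, path, country, default_pool="web-pool"):
    # Rules are evaluated in order; the first match decides the target pool.
    for rule in rules:
        if rule.matches(path, country):
            return rule.pool
    return default_pool


rules = [
    Rule(pool="api-pool", path_prefix="/api/"),
    Rule(pool="eu-web-pool", country="DE"),
]
print(route(rules, "/api/orders", "US"))  # api-pool
print(route(rules, "/home", "DE"))        # eu-web-pool
print(route(rules, "/home", "US"))        # web-pool
```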

These trends highlight the evolving nature of load balancing hardware, with a focus on customization, performance optimization, and intelligent decision-making. While hardware load balancers continue to play a crucial role in on-premises data centers, cloud networking and load balancing solutions offer additional benefits in terms of scalability, flexibility, and cost-effectiveness. As organizations embrace cloud-based infrastructure, it becomes essential to consider both hardware and cloud load balancers to meet the unique needs of their applications and workloads.

Frequently Asked Questions

What Hardware Is Used for Load Balancing?

When it comes to load balancing, hardware plays a crucial role in ensuring efficient distribution of web traffic.

Load balancing hardware, such as physical appliances, is used to achieve scalability and reliability in network environments. These devices implement various load balancing algorithms to optimize server utilization and improve overall performance.

Popular hardware load balancing solutions include products from vendors like F5 Networks, Citrix Systems, and A10 Networks. These solutions run on purpose-built hardware, support a range of load balancing algorithms, and offer benefits in terms of scalability and fault tolerance.

What Is the Load Balancing in Cloud Computing?

Load balancing in cloud computing refers to the distribution of web traffic across multiple network servers for improved performance and availability.

It encompasses both application level and network level load balancing techniques, which utilize various load balancing algorithms.

These algorithms consider factors such as server connections, processing power, and resource utilization to effectively distribute traffic.

The benefits of load balancing in cloud computing include enhanced scalability, responsiveness in failover and disaster recovery scenarios, and elimination of DNS-related delays.

What Is Hardware Defined Load Balancing?

Hardware defined load balancing (HDLB) refers to the use of physical appliances to distribute web traffic across multiple network servers. It offers benefits such as scalability and reduced site inaccessibility caused by server failure.

HDLB implementation involves routing techniques and algorithms that ensure optimal performance. However, there are challenges associated with HDLB, including fixed capacity limits and the need to purchase additional hardware in order to scale.

Despite its advantages, HDLB is generally not compatible with cloud servers, because a physical appliance typically cannot be installed inside a public cloud provider's infrastructure.

What Are the 3 Types of Load Balancers in AWS?

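The three most commonly cited types of load balancers in AWS are Application Load Balancer (ALB), Network Load Balancer (NLB), and Classic Load Balancer (CLB). AWS Elastic Load Balancing also includes a fourth type, Gateway Load Balancer (GWLB), which is used to deploy third-party virtual network appliances.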

Each type caters to different networking and application requirements.

ALB operates at Layer 7 of the OSI model, making it ideal for routing HTTP and HTTPS traffic.

NLB operates at Layer 4, distributing TCP and UDP traffic efficiently.

CLB is the previous-generation load balancer. It can operate at both Layer 4 and Layer 7, supporting TCP and SSL/TLS as well as HTTP and HTTPS.

These load balancers provide various load balancing algorithms and techniques for efficient cloud load balancing.