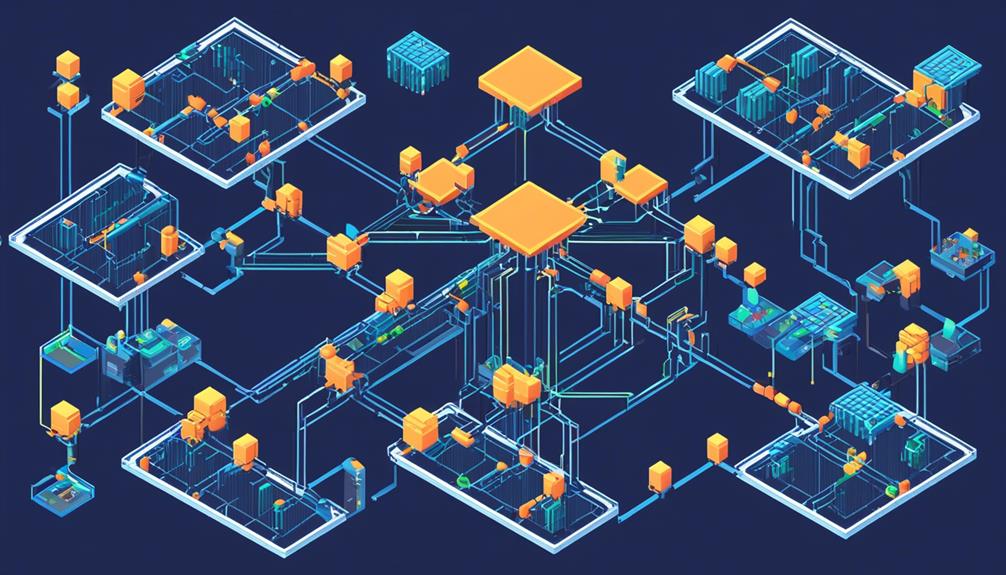

Load balancers play a critical role in network architecture by efficiently distributing network or application traffic across multiple servers. With the ability to optimize resource utilization, prevent server overloads, and conduct health checks, load balancers ensure the smooth functioning of networks and applications.

Load balancers can also add a layer of security: by spreading traffic across many servers, they help mitigate DDoS attacks and provide cost-effective protection.

To understand load balancers fully, it helps to look at their history, their role in SSL/TLS encryption, the algorithms they use, and the trade-offs between software and hardware load balancers.

This article also covers the difference between stateful and stateless load balancing, specialized load balancers such as application load balancers and load balancing routers, and adaptive load balancing, giving a comprehensive overview of this essential component of network architecture.

Key Takeaways

- Load balancing evenly distributes incoming requests across multiple servers or resources, improving application reliability and availability.

- Common load balancing algorithms include round-robin, least connections, and weighted round-robin, which efficiently allocate resources based on factors like server capacity and traffic load.

- SSL offloading moves TLS encryption and decryption, including the handshake, from the web servers to the load balancer, which can improve application performance and security by reducing the processing load on web servers and centralizing certificate management.

- Software load balancers offer flexibility and scalability, while hardware load balancers provide fast throughput and keep the appliance under the organization's direct control. DNS load balancing may add latency from DNS resolution and reacts slowly to server changes because clients cache DNS answers, while hardware load balancing offers faster response times.

History of Load Balancing

In the 1990s, load balancing emerged as a key component of network architecture, initially using hardware appliances to distribute traffic across a network. Load balancing is the process of distributing incoming requests across multiple servers or resources so that no single one becomes overwhelmed, which improves the performance, reliability, and availability of applications.

Load balancing algorithms determine how incoming requests are distributed across the network. They take into account factors such as server capacity, traffic load, and responsiveness to allocate resources efficiently. Commonly used load balancing algorithms include round-robin, least connections, and weighted round-robin.

Load balancing has become even more critical with the increasing complexity of applications, higher user demand, and rising traffic volume. As applications have become more sophisticated, they require more resources to function optimally. Load balancing ensures that these resources are efficiently utilized, resulting in improved application performance and user experience.

Traditionally, load balancing was handled by dedicated hardware appliances. As technology advanced, it expanded to include software-based load balancers and virtual appliances, which offer greater flexibility and scalability and let organizations adapt to changing network demands.

Load Balancing and SSL

Load balancing and SSL are closely intertwined in network architecture.

One key benefit of SSL offloading, where the load balancer decrypts traffic instead of the web servers, is that it removes decryption overhead from those servers and improves application performance.

Load balancer SSL certificates are essential for establishing secure connections between clients and the load balancer, ensuring the confidentiality and integrity of data.

Additionally, SSL session persistence is a critical feature that enables the load balancer to maintain SSL session information, allowing for a seamless user experience during subsequent requests.

SSL Offloading Benefits

SSL offloading at the load balancer provides significant benefits to network architecture, enhancing application performance, optimizing traffic distribution, and improving overall security.

By taking over SSL/TLS encryption and decryption, including the CPU-intensive handshake, the load balancer reduces the processing load on the web servers and frees them for application work. Load balancers with dedicated cryptographic hardware can also complete handshakes faster than general-purpose servers, further improving application performance.

SSL offloading also simplifies certificate management, because certificates are installed and renewed on the load balancer instead of on every web server. Centralizing certificates reduces the risk of an expired or misconfigured certificate on an individual server.

Furthermore, because traffic is decrypted at the load balancer, it can inspect requests before distributing them to the servers, which benefits both the security and the performance of applications.

Load Balancer SSL Certificates

By installing SSL certificates on the load balancer, organizations can secure communication between clients and the load balancer, which is the point where application traffic enters and is distributed across the available servers.

The certificate lets clients verify the load balancer's identity and establishes encryption for data in transit, protecting sensitive information from unauthorized access. Load balancers acting as application delivery controllers (ADCs) can manage SSL certificates on behalf of multiple backend servers, simplifying certificate management.

SSL termination at the load balancer improves application performance by offloading the decryption process from the web servers. Alternatively, SSL pass-through can be used to pass encrypted requests to web servers, enhancing security but potentially increasing the CPU load on the servers.

Load balancer SSL certificates are essential for securing and encrypting traffic in network architectures.

SSL Session Persistence

SSL Session Persistence keeps secure connections consistent for applications that depend on continuity, such as online banking or e-commerce websites. The load balancer tracks each SSL session and directs subsequent requests from the same user to the same server.

Here are some key points about SSL Session Persistence:

- Prevents disruption: SSL Session Persistence prevents disruption to the SSL session caused by load balancing algorithms that distribute traffic across multiple servers.

- Consistent experience: By maintaining SSL session persistence, users experience consistent and uninterrupted secure connections to the application servers.

- High availability: SSL Session Persistence contributes to high availability by ensuring that users can seamlessly access their applications without interruptions.

- Server maintenance: When a server must be taken out of service for maintenance or updates, the load balancer can stop assigning it new sessions and let existing sessions finish (connection draining), so users are not abruptly disconnected.

- Traffic distribution: Load balancers still spread new sessions evenly across servers, but SSL Session Persistence keeps each existing session on the same server for its duration.
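The persistence behaviour described above can be sketched in Python. This is a minimal illustration, assuming the load balancer can read a session identifier (for example, the TLS session ID or a session cookie) from each request; the `StickyBalancer` class and the server names are hypothetical. New sessions are assigned round robin, and a session whose server has left the pool is re-pinned to a remaining server.

```python
from itertools import cycle


class StickyBalancer:
    """Hypothetical sketch: pin each session ID to one backend server."""

    def __init__(self, servers):
        self.servers = list(servers)
        self._next = cycle(self.servers)   # round robin for brand-new sessions
        self.sessions = {}                 # session ID -> pinned server

    def route(self, session_id):
        server = self.sessions.get(session_id)
        # New session, or its server was removed: pick a server and pin it.
        if server not in self.servers:
            server = next(self._next)
            self.sessions[session_id] = server
        return server

    def remove_server(self, server):
        """Take a server out of the pool, e.g. for maintenance."""
        self.servers.remove(server)
        self._next = cycle(self.servers)


lb = StickyBalancer(["app-1", "app-2"])
print(lb.route("sess-a"), lb.route("sess-b"), lb.route("sess-a"))  # app-1 app-2 app-1
lb.remove_server("app-1")
print(lb.route("sess-a"))  # app-2
```

A re-pinned session lands on a server that has never seen it, so the client has to complete a new TLS handshake there, which is why draining existing connections before maintenance matters.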

Load Balancing and Security

Load balancing helps strengthen network security by mitigating Distributed Denial of Service (DDoS) attacks and reducing the burden on corporate servers. DDoS attacks flood servers with traffic, making them inaccessible to legitimate users. By spreading that traffic across multiple servers in a server pool, load balancers can absorb more of the load before any single server is overwhelmed. This helps keep services available and limits the damage a DDoS attack can cause.

Load balancers can also improve resilience by off-loading traffic to public cloud providers, whose scalable and robust infrastructure can absorb traffic surges and attacks. This can reduce the need for expensive, complex hardware defense mechanisms that are costly to maintain.

The table below summarizes the security-related benefits of load balancing, along with one risk to keep in mind:

| Aspect | Description |
|---|---|
| DDoS mitigation | Distributes traffic across multiple servers so that no single server is overwhelmed by a DDoS attack. |
| Cloud offload | Off-loads traffic to public cloud providers, using their scalability and robustness to absorb surges and attacks. |
| Cost-effectiveness | Reduces the need for expensive hardware-based defense mechanisms and their maintenance. |
| SSL termination | Improves application performance by relieving web servers of decryption overhead. |
| Security risk | If traffic between the load balancer and the web servers is not encrypted, SSL termination can expose the application to security risks. |

Load Balancing Algorithms

Load balancing algorithms play a critical role in optimizing network performance by efficiently distributing traffic across servers. Two commonly used algorithms are the Round Robin algorithm and the Least Connections algorithm.

The Round Robin algorithm sends incoming requests to each server in turn, so every server receives an equal share of the requests. As long as requests cost roughly the same to serve, this keeps any single server from being overwhelmed with traffic.

On the other hand, the Least Connections algorithm directs traffic to the server with the fewest active connections. By distributing incoming requests to the server with the least load, this algorithm ensures that server resources are utilized effectively and prevents any one server from being overloaded.

Both algorithms improve network performance by balancing the load across servers, preventing overloads and making efficient use of server resources.

Round Robin Algorithm

The Round Robin algorithm, a common load balancing method in network architecture, distributes traffic by rotating through a list of servers and sending each request to the next server in the sequence. It spreads requests evenly across servers and can be a simple, effective technique, but it comes with trade-offs:

- Round Robin algorithm doesn't consider server load or response time, which can lead to uneven server utilization.

- It is easy to implement and works well for environments with similar server capabilities and loads.

- Round Robin load balancing can be suitable for basic, non-critical applications or environments where simplicity is prioritized.

Despite its simplicity, the Round Robin algorithm may not be the best choice for complex or highly demanding applications that require more sophisticated load balancing techniques.
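A minimal round-robin picker can be sketched in Python, assuming a fixed server list; the `RoundRobin` class and server names are illustrative. The counter advances on every request without looking at server load, which is exactly the limitation noted above.

```python
class RoundRobin:
    """Minimal round-robin picker: each call returns the next server in order."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.index = 0

    def pick(self):
        # Wrap around the list with modulo; load and response time are ignored.
        server = self.servers[self.index % len(self.servers)]
        self.index += 1
        return server


rr = RoundRobin(["web-1", "web-2", "web-3"])
print([rr.pick() for _ in range(5)])  # ['web-1', 'web-2', 'web-3', 'web-1', 'web-2']
```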

Least Connections Algorithm

How does the Least Connections Algorithm improve load balancing in network architecture? The Least Connections Algorithm is a dynamic load balancing algorithm that aims to evenly distribute incoming traffic among available servers based on the number of active connections. By continuously monitoring the number of active connections on each server, the algorithm directs new requests to the server with the fewest connections. This ensures that the workload is evenly distributed and helps prevent server overloads. The Least Connections Algorithm is particularly useful in high-traffic environments where servers may experience varying levels of load. By distributing the traffic based on the number of active connections, this algorithm helps maintain optimal server performance and improves the overall efficiency of the network.

| Advantages | Disadvantages |
|---|---|
| Ensures even distribution of workload | May not consider server capacity |
| Prevents server overloads | Requires continuous monitoring |
| Helps maintain optimal server performance | May not handle sudden spikes in traffic |
| Efficiently distributes incoming traffic | May not account for server location |
| Suitable for high-traffic environments | May not support session affinity |

The Least Connections Algorithm is an effective load balancing method because it distributes work according to the number of active connections on each server. Its limitations, summarized in the table above, should be weighed against that benefit. Even so, it plays an important role in optimizing server performance and the overall efficiency of the network.
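The bookkeeping behind the Least Connections Algorithm can be sketched as follows. The `LeastConnections` class is hypothetical and assumes the load balancer is told when each connection opens and closes. It compares raw connection counts only, which is why the algorithm may not consider server capacity.

```python
class LeastConnections:
    """Hypothetical sketch: send each new connection to the least-busy server."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}  # open connections per server

    def acquire(self):
        # min() returns the first server with the lowest count, so ties follow list order.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Must be called when a connection closes; this is the monitoring cost.
        self.active[server] -= 1


lc = LeastConnections(["app-1", "app-2"])
print(lc.acquire(), lc.acquire(), lc.acquire())  # app-1 app-2 app-1
lc.release("app-2")
print(lc.acquire())  # app-2
```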

Software Vs. Hardware Load Balancers

Software and hardware load balancers are two distinct options in network architecture, each offering unique advantages and considerations. When it comes to load balancing, organizations need to carefully consider their requirements and constraints before making a decision.

Here are some key points to consider when comparing software and hardware load balancers:

- Flexibility and Scalability: Software load balancers offer the flexibility to adjust to changing network needs and can scale beyond initial capacity by adding more software instances. This makes them a suitable choice for organizations that require dynamic load balancing and anticipate significant growth in network load.

- Performance and Security: Hardware load balancers provide fast throughput through specialized processors, enabling efficient traffic distribution. Some organizations also prefer them for security because the appliance is under the organization's sole control. This makes them a common choice for organizations that prioritize performance and security.

- Cloud-Based Load Balancing: Software load balancers can provide cloud-based load balancing with off-site options and an elastic network of servers. This allows organizations to leverage the scalability and flexibility of the cloud, making them an ideal choice for cloud-native applications and distributed environments.

- Cost Considerations: Hardware load balancers have a fixed upfront purchase cost but require extra staff and expertise for configuration. Software load balancers avoid that upfront cost but incur ongoing costs for upgrades, and scaling beyond their current capacity can involve an initial configuration delay. Organizations should carefully evaluate their budget and operational requirements when comparing the costs of each option.

- Expertise and Support: Hardware load balancers often require specialized knowledge and expertise for configuration and maintenance, which can be a challenge for organizations without dedicated IT staff. Software load balancers, on the other hand, may offer more accessible support options and easier management through user-friendly interfaces.

DNS Load Balancing Vs. Hardware Load Balancing

DNS Load Balancing and Hardware Load Balancing are two approaches used in network architecture to distribute traffic across multiple servers.

DNS Load Balancing involves utilizing the DNS server to direct client requests to different IP addresses, thereby distributing the load across multiple servers based on the DNS response.

On the other hand, Hardware Load Balancing employs physical devices specifically designed for traffic distribution, ensuring efficient and reliable traffic management within the network architecture.

One key difference between DNS Load Balancing and Hardware Load Balancing lies in response time. DNS Load Balancing can add latency because it relies on DNS resolution, and because clients and resolvers cache DNS answers for the record's time to live (TTL), it reacts slowly when a server fails or is removed. Hardware Load Balancing typically responds faster because it handles each connection directly. This difference can significantly affect the overall performance of network services.

Another distinction is the level of control and flexibility each approach provides. DNS Load Balancing is often easier to set up and manage, since it only requires publishing multiple DNS records, although changes to the server list take effect only as cached records expire. Hardware Load Balancing, by contrast, provides more granular control over traffic management and offers advanced features for handling complex network requirements.

Furthermore, Hardware Load Balancers are often preferred in scenarios where high availability and scalability are crucial. They can handle large traffic volumes, make intelligent routing decisions, and offer features such as SSL acceleration and content caching.

In contrast, DNS Load Balancing may struggle with these advanced capabilities, making it less suitable for complex network setups.
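DNS-based distribution and its caching delay can be illustrated with two toy classes. This is a sketch under simplifying assumptions: `RotatingDnsZone` and `CachingClient` are hypothetical, the addresses come from the 192.0.2.0/24 documentation range, and real resolvers, operating systems, and browsers add their own caching layers. It shows why a client keeps using a removed server's address until the cached answer expires.

```python
from collections import deque


class RotatingDnsZone:
    """Hypothetical authoritative DNS server that rotates its A records per query."""

    def __init__(self, addresses, ttl):
        self.addresses = deque(addresses)
        self.ttl = ttl  # seconds clients may cache the answer

    def resolve(self):
        answer = list(self.addresses)
        self.addresses.rotate(-1)  # the next query gets a different first record
        return answer, self.ttl


class CachingClient:
    """Client that uses the first record and reuses it until the TTL expires."""

    def __init__(self, zone):
        self.zone = zone
        self.cached = None
        self.expires_at = 0.0

    def address(self, now):
        if self.cached is None or now >= self.expires_at:
            answer, ttl = self.zone.resolve()
            self.cached, self.expires_at = answer[0], now + ttl
        return self.cached


zone = RotatingDnsZone(["192.0.2.10", "192.0.2.11"], ttl=300)
alice, bob = CachingClient(zone), CachingClient(zone)
print(alice.address(now=0), bob.address(now=0))  # 192.0.2.10 192.0.2.11
zone.addresses.remove("192.0.2.10")              # server taken out of DNS
print(alice.address(now=100))                    # 192.0.2.10 (stale cached answer)
print(alice.address(now=300))                    # 192.0.2.11
```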

Per App Load Balancing

Per App Load Balancing offers numerous benefits in network architecture, including:

- The efficient allocation of resources

- Enhanced performance

- Improved user experience

Load balancing algorithms play a crucial role in distributing traffic, ensuring optimized distribution for each application.

Implementing per App Load Balancing provides:

- Granular control over traffic distribution

- Enhanced security and reliability

- Prevention of overload on specific applications

Benefits of Per App Load Balancing

What are the benefits of implementing per app load balancing in network architecture?

- Per app load balancing provides dedicated application services for scaling, accelerating, and securing applications.

- It ensures that each application receives the resources it needs for optimal performance.

- Per app load balancing allows for granular control and optimization of traffic for individual applications.

- It enables efficient resource allocation and utilization tailored to the specific requirements of each application.

- Per app load balancing enhances the overall user experience by dynamically managing the distribution of traffic based on individual application needs.

Load Balancing Algorithms for Per App Load Balancing

Load balancing algorithms play a crucial role in per app load balancing, which tailors load balancing strategies to each application so that traffic distribution and server routing meet that application's unique needs. By managing server availability and load per application, per app load balancing provides dedicated services for scaling, accelerating, and securing each application, improving the user experience. The following table compares common algorithms used in this context:

| Load Balancing Algorithm | Description | Benefits |
|---|---|---|
| Round Robin | Distributes requests equally among servers in a round-robin fashion | Ensures fair resource allocation |
| Least Connections | Routes traffic to the server with the fewest active connections | Improves response time |
| Source IP Hash | Routes requests based on the source IP address, ensuring consistency | Supports session persistence |

These load balancing algorithms, among others, enable network architects to optimize performance and resource utilization for individual applications, leading to improved user experience and overall network efficiency.
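Source IP Hash can be sketched in a few lines of Python. The `pick_by_source_ip` helper and server names are hypothetical, and SHA-256 is an illustrative choice of stable hash rather than what any particular product uses. The same client address always maps to the same server as long as the server list does not change.

```python
import hashlib


def pick_by_source_ip(client_ip, servers):
    """Hypothetical helper: map a client IP to the same server on every request."""
    # hashlib is deterministic across processes; Python's built-in hash() for
    # strings is randomized per process, so it would break persistence on restart.
    digest = hashlib.sha256(client_ip.encode("ascii")).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


servers = ["app-1", "app-2", "app-3"]
print(pick_by_source_ip("198.51.100.7", servers) == pick_by_source_ip("198.51.100.7", servers))  # True
```

Because the server is chosen by `hash % len(servers)`, adding or removing a server remaps most clients to different servers; consistent hashing is a common way to reduce that churn.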

Implementing per App Load Balancing

Implementing per App Load Balancing involves utilizing modern Application Delivery Controllers (ADCs) to configure specific load balancing settings tailored to the unique requirements of individual applications. This approach allows for dedicated application services that can scale, accelerate, and secure applications effectively. Per App Load Balancing optimizes the use of application delivery resources for each application independently, making it particularly beneficial for complex applications with varying traffic patterns and resource demands.

Key points to consider when implementing per App Load Balancing:

- Load balancers distribute traffic across multiple servers, ensuring efficient utilization of resources.

- Routing decisions are based on various types of load balancing algorithms, such as round-robin, least connections, or weighted distribution.

- Per App Load Balancing uses reverse proxy techniques to distribute the network traffic.

- Hardware load balancers provide high-performance load balancing capabilities.

- Application services can be customized and configured based on the unique requirements of each application.
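Per-application settings can be sketched as one pool per application, each with its own server list, algorithm, and health-check path. This is a hypothetical model, not the configuration format of any real ADC: the `AppPool` class, the host names, and paths such as `/healthz` and `/cart/health` are assumptions, and applications are selected here by the request's Host header.

```python
from itertools import cycle


class AppPool:
    """Hypothetical per-application pool with its own algorithm and health check."""

    def __init__(self, servers, algorithm="round_robin", health_check_path="/healthz"):
        self.servers = list(servers)
        self.algorithm = algorithm                  # "round_robin" or "least_connections"
        self.health_check_path = health_check_path  # assumed per-app setting
        self.active = {s: 0 for s in self.servers}
        self._rr = cycle(self.servers)

    def pick(self):
        if self.algorithm == "least_connections":
            server = min(self.active, key=self.active.get)
        else:
            server = next(self._rr)
        self.active[server] += 1  # caller releases when the request ends
        return server

    def release(self, server):
        self.active[server] -= 1


# One pool per application, selected here by the request's Host header.
POOLS = {
    "shop.example.com": AppPool(["shop-1", "shop-2"], "least_connections", "/cart/health"),
    "api.example.com": AppPool(["api-1", "api-2", "api-3"]),
}


def route(host):
    return POOLS[host].pick()
```

Requests for `api.example.com` rotate through the API servers, while `shop.example.com` is balanced by active connections, and neither application's settings affect the other.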

Weighted Load Balancing Vs Round Robin

Weighted Load Balancing and Round Robin are two commonly used methods for distributing network traffic among multiple servers in a network architecture. Load balancing plays a crucial role in ensuring efficient and reliable delivery of IP services.

Round Robin load balancing is a simple and widely adopted approach. In this method, the main load balancer distributes client requests evenly across the available servers in a cyclical manner. Each server receives an equal share of the traffic, regardless of its capacity or current load. This approach is easy to implement and works well in situations where all servers have similar capabilities. However, it may not be suitable for scenarios where servers have varying capacities or when there is a need to prioritize certain servers or applications.

On the other hand, Weighted Load Balancing allows for more fine-grained control over the distribution of traffic. It assigns a weight or priority to each server based on its capacity, performance, or other factors. The main load balancer takes these weights into account when distributing traffic, ensuring that servers with higher capacities handle a larger share of the load. This approach is especially useful in situations where servers have different capabilities or when certain applications require more resources than others. Weighted Load Balancing can be implemented using software load balancers or specialized hardware load balancers.
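Weighted distribution can be sketched in Python using the "smooth" weighted round robin approach used by nginx, which interleaves picks instead of sending a server its whole share in one burst. The class name, server names, and weights are illustrative assumptions; in practice, weights would reflect configured or measured server capacity.

```python
class SmoothWeightedRoundRobin:
    """Weighted round robin in the smooth style: higher weight, more frequent picks."""

    def __init__(self, weights):
        self.weights = dict(weights)                  # server -> weight
        self.current = {s: 0 for s in self.weights}   # running score per server
        self.total = sum(self.weights.values())

    def pick(self):
        for server, weight in self.weights.items():
            self.current[server] += weight
        # Highest running score wins; ties go to the first server in insertion order.
        chosen = max(self.current, key=self.current.get)
        self.current[chosen] -= self.total
        return chosen


wrr = SmoothWeightedRoundRobin({"big": 5, "small-1": 1, "small-2": 1})
print([wrr.pick() for _ in range(7)])
# ['big', 'big', 'small-1', 'big', 'small-2', 'big', 'big']
```

Over each cycle of `sum(weights)` picks, every server is chosen as many times as its weight, so the higher-capacity server handles five of every seven requests here.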

Load Balancer Health Check

Load balancer health checks are essential for keeping IP services reliable and the network infrastructure stable. They probe each server at regular intervals to identify unhealthy instances, so the load balancer can send incoming traffic only to healthy ones.

To emphasize the importance of load balancer health checks, here are five key points:

- Automatic removal of unhealthy instances: Load balancers automatically remove unhealthy servers from the pool to prevent them from receiving traffic. This ensures that only healthy servers handle incoming requests, minimizing user disruptions.

- Ongoing monitoring of server status: Health checks probe servers at regular intervals, checking server responses, resource usage, and other performance indicators. This enables load balancers to make informed decisions regarding traffic distribution.

- Enhanced system reliability: By regularly checking the health of servers, load balancers help maintain system reliability. If a server becomes unresponsive or experiences high resource utilization, the load balancer can redirect traffic to healthier instances, preventing potential service disruptions.

- Efficient resource utilization: By keeping traffic away from failed or struggling servers, health checks let the load balancer spread requests across the servers that can actually handle them. This prevents any single healthy server from becoming overloaded and improves the overall performance and responsiveness of IP services.

- Scalability and flexibility: Load balancer health checks facilitate the scalability and flexibility of network architecture. As new instances are added or removed, the load balancer dynamically adjusts its routing decisions, ensuring that traffic is evenly distributed among the available healthy servers.
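The pool-membership logic behind health checks can be sketched as a small state machine. This is a hypothetical illustration: the probes themselves (an HTTP request or TCP connect) are omitted and their results are passed in, and the `HealthTracker` class, its threshold names, and its defaults are assumptions, although many load balancers expose comparable healthy and unhealthy threshold settings. Requiring several consecutive results before changing state keeps a single slow probe from pulling a server out of the pool.

```python
class HealthTracker:
    """Hypothetical health-check state machine with failure and recovery thresholds."""

    def __init__(self, servers, unhealthy_threshold=3, healthy_threshold=2):
        self.unhealthy_threshold = unhealthy_threshold
        self.healthy_threshold = healthy_threshold
        self.healthy = {s: True for s in servers}
        self.streak = {s: 0 for s in servers}  # consecutive results contradicting current state

    def record(self, server, probe_ok):
        if probe_ok == self.healthy[server]:
            self.streak[server] = 0  # result agrees with current state
            return
        self.streak[server] += 1
        limit = self.healthy_threshold if probe_ok else self.unhealthy_threshold
        if self.streak[server] >= limit:
            self.healthy[server] = probe_ok  # remove from, or return to, the pool
            self.streak[server] = 0

    def pool(self):
        return [s for s, ok in self.healthy.items() if ok]


hc = HealthTracker(["web-1", "web-2"])
for _ in range(3):
    hc.record("web-2", probe_ok=False)  # three failed probes in a row
print(hc.pool())                         # ['web-1']
hc.record("web-2", probe_ok=True)
print(hc.pool())                         # ['web-1'] (needs two successes)
hc.record("web-2", probe_ok=True)
print(hc.pool())                         # ['web-1', 'web-2']
```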

Stateful Vs Stateless Load Balancing

Load balancing plays a vital role in network architecture. An important aspect to consider is the choice between stateful and stateless load balancing.

A load balancer is a hardware or software device that acts as a reverse proxy, distributing network traffic across multiple servers. There are different types of load balancers, including hardware load balancers, which require dedicated hardware, and software load balancers, which can be deployed on standard servers.

Stateful load balancing, as the name suggests, keeps track of sessions and the state of connections. This means that requests from the same user are consistently directed to the same server, ensuring session persistence. Stateful load balancing is particularly beneficial for applications that require persistence, such as e-commerce shopping carts or online banking. However, it introduces overhead due to session management, which can impact scalability and fault-tolerance.

On the other hand, stateless load balancing treats each request independently and does not retain session information. This makes it simpler and more efficient for distributing individual requests without considering previous interactions. Stateless load balancing is well-suited for stateless protocols like HTTP, where each request is self-contained. It offers better scalability and fault-tolerance since it does not require storing and managing session information.

When deciding between stateful and stateless load balancing, it is essential to consider the specific requirements of the application. If session persistence is necessary, stateful load balancing is recommended. However, if scalability and fault-tolerance are critical, stateless load balancing is a more suitable choice. It is also worth noting that the load balancing algorithm used by the load balancer can further influence the performance and efficiency of the system.

Application Load Balancer

The Application Load Balancer (ALB) operates at Layer 7 of the OSI model, making routing decisions based on application-specific data and offering advanced features for modern applications. ALB distributes traffic across a server pool so that no single server is overwhelmed and becomes a traffic bottleneck. It supports several routing methods for distributing traffic efficiently, such as path-based routing and host-based routing.

Here are five key features and benefits of the Application Load Balancer:

- Microservices and Container Support: ALB directs traffic to different targets, such as EC2 instances, containers, and IP addresses, making it ideal for microservices and container-based architectures. This enables efficient load balancing in dynamic and scalable environments.

- Content-Based Routing: ALB provides content-based routing, allowing you to route traffic to different services based on the content of the request. This feature provides flexibility in directing requests to specific services based on specific criteria.

- WebSocket and HTTP/2 Support: ALB offers support for WebSocket and HTTP/2 protocols, enabling real-time communication and improving performance for modern applications. This support ensures a seamless experience for applications that require low-latency and high-throughput communication.

- Integrated Containerized Application Support: ALB integrates well with containerized applications, enabling you to manage traffic efficiently in dynamic container environments. It allows you to scale your applications and automatically distribute traffic to healthy containers.

- Enhanced Security: ALB supports various security features, such as SSL/TLS termination, which offloads SSL decryption from the web server to the load balancer. This improves the performance of the web server and simplifies the management of SSL certificates.
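Host-based and path-based routing can be sketched as an ordered list of rules where the first match wins. On AWS, ALB expresses this through listener rules with conditions and priorities; the dictionary format, target names, and `select_target` function below are hypothetical and are not the AWS API. `fnmatchcase` is used so that `*` wildcards match case-sensitively on every platform.

```python
from fnmatch import fnmatchcase

# Hypothetical routing rules, checked in order; the first match wins.
RULES = [
    {"host": "api.example.com", "path": "/v2/*", "target": "api-v2"},
    {"host": "api.example.com", "path": "*", "target": "api-v1"},
    {"host": "*", "path": "/static/*", "target": "static-assets"},
]
DEFAULT_TARGET = "web"


def select_target(host, path):
    """Return the target group for a request by matching host and path patterns."""
    for rule in RULES:
        if fnmatchcase(host, rule["host"]) and fnmatchcase(path, rule["path"]):
            return rule["target"]
    return DEFAULT_TARGET


print(select_target("api.example.com", "/v2/users"))          # api-v2
print(select_target("www.example.com", "/static/logo.png"))   # static-assets
print(select_target("www.example.com", "/checkout"))          # web
```

Rule order matters: a request to `api.example.com/static/app.js` matches the second rule before the static-assets rule, so it goes to `api-v1`.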

Load Balancing Router

In network architecture, a load balancing router serves as a crucial component in distributing network or application traffic across multiple servers, ensuring optimal performance and reliability while efficiently managing the flow of information between servers and endpoint devices. By distributing traffic based on various algorithms, such as round-robin, least connection, weighted round-robin, and IP hash, the load balancing router can effectively distribute the workload among servers in a server pool or server farm.

To emphasize the importance and benefits of a load balancing router, the following table highlights key points:

| Benefits of Load Balancing Router |
|---|
| Prevents server overloads |
| Optimizes application delivery resources |
| Efficiently manages flow of information |
| Improves performance and availability |

Load balancing routers can be deployed either as physical hardware or virtualized instances. They can be deployed on-premises, in data centers, or in the public cloud, depending on the specific network architecture requirements. Continuous health checks are conducted on servers to ensure their availability and performance. If any server is detected as unhealthy, it is automatically removed from the server pool, preventing it from impacting the overall performance and reliability of the network.

The load balancing router plays a critical role in maintaining optimal performance and reliability in network architecture. It efficiently distributes traffic, ensuring that no single server is overwhelmed with requests, while maximizing the utilization of resources. By seamlessly managing the flow of information between servers and endpoint devices, the load balancing router helps to create a robust and efficient network infrastructure.

Adaptive Load Balancing

Adaptive load balancing uses a feedback mechanism to dynamically adjust how network traffic is distributed across links, correcting imbalances in real time. It continuously monitors network conditions to optimize traffic flow, which makes it effective for fluctuating workloads and changing network conditions. By aiming to keep performance and responsiveness high, adaptive load balancing improves the user experience in various network architectures, including cloud computing and server pools.

Key points about adaptive load balancing include:

- Efficient traffic distribution: Adaptive load balancing ensures that network traffic is distributed evenly across multiple links, preventing any single link from becoming overloaded.

- Real-time correction: This technique monitors the network continuously and adjusts traffic distribution in real-time to correct any imbalances that may occur due to changing conditions.

- Optimization for varying workloads: Adaptive load balancing is particularly beneficial in scenarios where workloads fluctuate. It dynamically adapts the traffic distribution to handle peak periods and low-demand periods efficiently.

- Scalability: Adaptive load balancing allows networks to scale beyond the capacity of a single load balancer. By distributing traffic across multiple links, it enables networks to handle larger volumes of traffic without degradation in performance.

- Virtual load balancing: Adaptive load balancing can be implemented using virtual load balancers, which provide flexibility and scalability in cloud computing environments. Virtual load balancers can be easily deployed or scaled up or down as needed.
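A feedback loop of this kind can be sketched as follows, assuming the load balancer measures the response time of each target (a link or a server) and converts those measurements into traffic shares. The `AdaptiveWeights` class, the smoothing factor, and the inverse-latency rule are illustrative choices, not a standard algorithm; production systems may use other signals such as queue depth or error rate.

```python
class AdaptiveWeights:
    """Hypothetical feedback loop: shift traffic toward targets that respond faster."""

    def __init__(self, targets, alpha=0.3, initial_latency_ms=100.0):
        self.alpha = alpha  # smoothing factor for the moving average
        self.latency = {t: initial_latency_ms for t in targets}

    def observe(self, target, latency_ms):
        # Exponentially weighted moving average damps one-off spikes.
        old = self.latency[target]
        self.latency[target] = (1 - self.alpha) * old + self.alpha * latency_ms

    def weights(self):
        # Weight each target by 1 / latency, normalized so the shares sum to 1.
        inverse = {t: 1.0 / ms for t, ms in self.latency.items()}
        total = sum(inverse.values())
        return {t: v / total for t, v in inverse.items()}


aw = AdaptiveWeights(["link-a", "link-b"])
aw.observe("link-b", 400.0)  # link-b suddenly slows down
print({t: round(w, 2) for t, w in aw.weights().items()})  # {'link-a': 0.66, 'link-b': 0.34}
```

The resulting shares could drive a weighted picker such as weighted round robin, recomputed as new measurements arrive so that traffic moves back once the slow link recovers.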

Frequently Asked Questions

What Is Load Balancer Architecture?

Load balancer architecture refers to the design and implementation of load balancing in a system, whether on-premises or in cloud computing. It involves load balancers, which distribute network or application traffic across multiple servers to optimize performance and reliability.

Load balancers employ various algorithms, such as round robin, least connections, and IP hash, to allocate requests among servers. They provide benefits such as scalability, high availability, and improved security in network architecture. Load balancers can also be integrated with containerized environments and help mitigate DDoS attacks.

Following configuration best practices helps ensure optimal performance.

What Does a Load Balancer Do in a Network?

A load balancer is a crucial component in network architecture that helps distribute incoming network or application traffic across multiple servers. It ensures optimal performance by evenly distributing the load and preventing overloads on individual servers. This improves application services and enhances scalability and high availability.

Load balancers use various algorithms to distribute traffic efficiently, such as the least connections method and IP hash. They also offer advantages such as improved security, absorption of DDoS traffic, and cost-effective protection.

Choosing the right load balancer involves considering key features, implementation best practices, and security considerations.

What Are the 3 Types of Load Balancers in AWS?

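The three types of load balancers in AWS most often cited are listed below. Elastic Load Balancing also offers a fourth type, Gateway Load Balancer, for deploying and scaling third-party virtual appliances.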

- Classic Load Balancer: This load balancer is the oldest type and is designed to distribute traffic evenly across multiple instances. It operates at both the application and transport layers of the OSI model, making it suitable for a wide range of applications. Classic Load Balancer offers features such as SSL termination, session stickiness, and health checks.

- Application Load Balancer: This load balancer is specifically designed to route traffic to different targets based on the content of the request. It operates at the application layer of the OSI model and supports advanced load balancing features such as path-based routing, host-based routing, and support for HTTP/2 and WebSocket protocols.

- Network Load Balancer: This load balancer is designed to handle TCP, UDP, and TLS traffic and is ideal for high-performance applications that require ultra-low latency. Network Load Balancer operates at the transport layer of the OSI model and provides features such as a static IP address per Availability Zone (which can be an Elastic IP address) and health checks.

Each load balancer type offers a variety of features and capabilities to efficiently distribute network traffic across multiple servers or instances.

What Is LB in System Architecture?

A load balancer (LB) in system architecture is a device or software that distributes incoming network traffic across multiple servers to optimize resources, improve performance, and ensure fault tolerance.

It employs various load balancing algorithms to evenly distribute traffic, such as round-robin, least connections, and IP hash.

LBs can be implemented as physical or virtualized appliances, and they offer scalability to handle increasing traffic demands.

Additionally, load balancers provide security features, such as SSL termination, DDoS mitigation, and continuous health checks on servers, enhancing application performance and security.