Load balancers are a critical component in network security, providing a robust solution for distributing traffic and optimizing performance. With the increasing prevalence of cyber threats and the growing demand for seamless user experiences, organizations need to understand the role that load balancers play in safeguarding their networks.

This discussion aims to explore the various aspects of load balancing in network security, including its benefits, algorithms, functionality, and best practices.

By delving into this topic, we can uncover the intricacies of load balancers and their significance in ensuring the availability, scalability, and integrity of modern network infrastructures.

The sections below look at how load balancers work and where they contribute to network security.

Key Takeaways

- Load balancers play a crucial role in network security by distributing network or application traffic across multiple servers.

- Load balancing improves system reliability, availability, and application performance by distributing traffic efficiently and reducing response times.

- Load balancers provide redundancy and minimize downtime by preventing overloading of servers.

- Load balancing algorithms, such as round-robin and least connections, optimize application availability, scalability, security, and performance.

What Is a Load Balancer?

A load balancer is a device or software utilized to distribute network or application traffic across multiple servers, ensuring optimal performance and preventing overloading. Load balancing plays a crucial role in managing traffic efficiently and maintaining high availability for applications.

Load balancers can be physical appliances, virtual machines, or cloud-based services, providing flexibility and scalability to meet varying organizational needs.

Load balancers use various algorithms and techniques to distribute incoming requests among available servers. One commonly used algorithm is round-robin, where each server is assigned a turn to handle requests sequentially. Another algorithm, least connections, directs traffic to the server with the fewest active connections, ensuring an even distribution. Additionally, session persistence ensures that subsequent requests from the same client are directed to the same server, maintaining a consistent user experience.
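Session persistence can be implemented in several ways. The following is a minimal sketch of one of them, hashing the client IP address, and is not tied to any particular product. The function name `pick_server` and the server names are hypothetical.

```python
import hashlib


def pick_server(client_ip: str, servers: list[str]) -> str:
    """Map a client IP to a server deterministically (source-IP hashing)."""
    # Hash the address so clients spread roughly evenly over the pool.
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["app-1", "app-2", "app-3"]  # hypothetical backend names
first = pick_server("203.0.113.7", servers)
second = pick_server("203.0.113.7", servers)
print(first == second)  # the same client lands on the same server
```

With this simple modulo scheme, adding or removing a server changes the mapping for many clients, which is one reason real deployments often rely on cookies or consistent hashing instead.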

There are different types of load balancing strategies suitable for different traffic patterns and application demands. Dynamic load balancing adapts to changing network conditions, adjusting the distribution of traffic based on server performance metrics. On the other hand, static load balancing involves predefined rules that evenly distribute traffic among servers, regardless of their current load.
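The difference between the two strategies can be sketched in a few lines. This is an illustration under simple assumptions: the static strategy follows a fixed, precomputed order, and the dynamic strategy uses measured latency as its only performance metric. All names and numbers are hypothetical.

```python
def static_pick(request_number: int, fixed_order: list[str]) -> str:
    """Static: follow a predefined order, ignoring current server load."""
    return fixed_order[request_number % len(fixed_order)]


def dynamic_pick(latency_ms: dict[str, float]) -> str:
    """Dynamic: choose the server with the best current metric."""
    return min(latency_ms, key=latency_ms.get)


# Predefined rule: app-1 is listed twice, so it gets two of every three requests.
fixed_order = ["app-1", "app-1", "app-2"]
static_choices = [static_pick(i, fixed_order) for i in range(3)]

# Measured response times at this moment (made-up values).
dynamic_choice = dynamic_pick({"app-1": 120.0, "app-2": 35.0})
```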

F5 offers a comprehensive range of load balancing solutions, including hardware and software-based options, to cater to specific organizational needs. F5's load balancers provide advanced features such as SSL offloading, content caching, and application layer health checks, ensuring optimal performance and high availability for applications.

Benefits of Load Balancing

Load balancing offers several benefits for network security, including:

- The efficiency of distribution and enhanced performance. By distributing traffic evenly across multiple servers, load balancers ensure that resources are utilized efficiently, preventing any single server from becoming overloaded. This results in improved application performance and response times.

- Enhanced system reliability. Load balancing enhances system reliability by providing redundancy and high availability, minimizing downtime and ensuring a seamless user experience.

Efficiency of Distribution

Efficiency of distribution is a key benefit of load balancing. Load balancers effectively distribute traffic across multiple servers to ensure optimal performance and prevent overloading. This is achieved by intelligently routing requests to the most appropriate server based on factors such as server load, latency, and availability.

Load balancers play a crucial role in distributing traffic at the application layer. They ensure that each server receives a balanced load of traffic, avoiding bottlenecks and maximizing resource utilization. This enhances the overall efficiency and performance of a network, delivering a seamless experience to users.

Load balancing also extends to network security. By distributing traffic evenly across servers, load balancers make it more difficult for malicious actors to overwhelm a single server with a flood of requests. This helps maintain the security of the network.

Enhanced Performance

The implementation of load balancing leads to a multitude of benefits, specifically in terms of enhancing overall network performance.

Load balancers play a crucial role in ensuring consistent access to services, thus improving application availability.

With load balancing, applications can seamlessly handle increased traffic loads, resulting in improved scalability.

Additionally, load balancers contribute to enhanced application security by distributing and redirecting traffic, protecting against potential threats.

This optimization of traffic distribution also leads to optimized application performance, resulting in better user experience and response times.

Furthermore, load balancing increases efficiency and reduces downtime, further contributing to enhanced performance.

Load Balancing Algorithms

When it comes to load balancing algorithms, two commonly used ones are the Round Robin Algorithm and the Least Connections Algorithm.

The Round Robin Algorithm distributes network requests in a sequential manner across servers, so each server receives an equal share of the requests, although not necessarily an equal share of the work.

On the other hand, the Least Connections Algorithm directs traffic to the server with the fewest active connections, helping to distribute the load more evenly and prevent overloading of any single server.

These algorithms play a crucial role in optimizing application availability, scalability, security, and performance within network security.

Round Robin Algorithm

The Round Robin Algorithm is a load balancing method that sequentially distributes requests across servers, offering a simple and equitable distribution of traffic. This algorithm does not take into account the server's current load or capacity, making it a straightforward approach to distribute network traffic.

Some key points about the Round Robin Algorithm are:

- It is easy to implement and works well in environments with similar server capacities and performance.

- It may not be suitable for dynamic or heterogeneous server environments as it lacks the ability to consider server load or response time.

- Despite its limitations, the Round Robin Algorithm is widely used due to its simplicity and ease of implementation.
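A minimal sketch of round robin selection follows. The class name `RoundRobinBalancer` and the server names are illustrative, and the sketch assumes a fixed server list.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand out servers in a fixed, repeating order."""

    def __init__(self, servers: list[str]) -> None:
        if not servers:
            raise ValueError("at least one server is required")
        self._pool = cycle(list(servers))

    def next_server(self) -> str:
        # No load or capacity information is consulted here.
        return next(self._pool)


rr = RoundRobinBalancer(["app-1", "app-2", "app-3"])
order = [rr.next_server() for _ in range(5)]
print(order)  # ['app-1', 'app-2', 'app-3', 'app-1', 'app-2']
```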

In network security, load balancers play a crucial role in distributing traffic efficiently. Both hardware and software load balancers can employ the Round Robin Algorithm for server selection. However, for more advanced load balancing needs, techniques like global server load balancing can offer better traffic distribution and improved network security.

Least Connections Algorithm

The Least Connections Algorithm is a load balancing method that selects the server with the fewest active connections for routing network traffic. Load balancers using this algorithm prioritize directing requests to servers with the lightest traffic load.

By sending new requests to the server with the fewest current connections, the algorithm ensures efficient distribution of traffic. This approach aims to evenly distribute requests among servers based on their current load, optimizing performance and preventing overloads.
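As a rough sketch, least connections only needs a count of open connections per server. The class below is hypothetical, and it assumes the caller reports when a connection closes.

```python
class LeastConnectionsBalancer:
    """Track active connections and pick the least busy server."""

    def __init__(self, servers: list[str]) -> None:
        self.active = {server: 0 for server in servers}

    def acquire(self) -> str:
        # On a tie, min() returns the first server in insertion order.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Call this when the connection to `server` closes.
        if self.active[server] > 0:
            self.active[server] -= 1


lc = LeastConnectionsBalancer(["app-1", "app-2"])
a = lc.acquire()  # app-1 (tie, first in order)
b = lc.acquire()  # app-2 (app-1 now has one connection)
lc.release(a)     # app-1 drops back to zero
c = lc.acquire()  # app-1 again
```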

Load balancing with the Least Connections Algorithm is particularly effective in maintaining server availability and responsiveness during fluctuating traffic loads. Because it steers new requests away from servers that are already busy, it can also help keep individual servers responsive during traffic floods such as distributed denial-of-service attacks, although it is not a defense on its own.

Hardware load balancers often offer the Least Connections Algorithm as one of their load balancing algorithms, selecting servers by their current number of active connections.

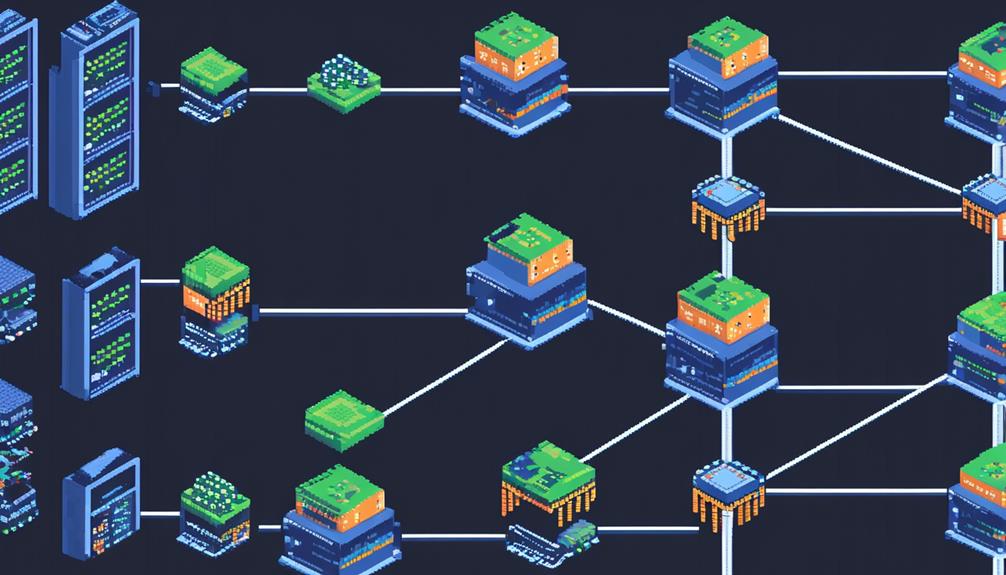

How Load Balancing Works

Load balancing is an essential mechanism for optimizing performance and availability by distributing user requests to backend servers. When a server goes down, the load balancer redirects its traffic to the remaining servers so that users continue to get a seamless experience. Load balancing technologies, whether hardware-based, software-based, or virtual, play a crucial role in managing traffic and preventing server overloads.

To efficiently distribute traffic across servers, various load balancing algorithms are employed. These algorithms determine how user requests are distributed, ensuring that each server receives a fair share of the load. Some commonly used load balancing algorithms include:

- Round Robin: This algorithm distributes user requests evenly across the backend servers in a cyclical manner. Each server receives a request in turn, ensuring that no single server is overwhelmed with traffic.

- Threshold: With this algorithm, a predefined threshold is set for each server. When the number of connections or the load on a server exceeds the threshold, the load balancer stops directing traffic to that server until its load drops back below the threshold.

- Least Connections: This algorithm directs user requests to the server with the fewest active connections. By distributing the load based on the number of active connections, this algorithm ensures that servers with lower loads receive more requests.
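The threshold approach in the list above can be sketched as follows. The function name and limits are hypothetical, and two assumptions are made: a server sitting exactly at its limit is treated as full, and the first eligible server in order is chosen.

```python
from typing import Optional


def pick_below_threshold(active: dict[str, int],
                         limits: dict[str, int]) -> Optional[str]:
    """Return the first server whose connection count is under its limit."""
    for server, count in active.items():
        if count < limits[server]:
            return server
    return None  # every server is at or over its threshold


active = {"app-1": 100, "app-2": 40, "app-3": 10}   # current connections
limits = {"app-1": 100, "app-2": 50, "app-3": 50}   # per-server thresholds
chosen = pick_below_threshold(active, limits)        # app-1 is full, so app-2
```

A real load balancer also has to decide what to do when every server is over its threshold, for example queueing or rejecting the request.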

Load balancing is particularly important for web applications and data-intensive services where a single server may not be able to handle the incoming traffic. By effectively distributing the load, load balancers optimize performance, improve availability, and enhance security by preventing server overloads and ensuring continuous operation of IP-based services.

Examples of Load Balancing

To further explore load balancing in practice, let's examine various examples of its implementation in different scenarios. Load balancing is a crucial component of network security, ensuring efficient traffic distribution and high availability. There are different types of load balancers available, including hardware load balancers and software load balancers, each with its own advantages and use cases.

One example of load balancing is dynamic load balancing, which is used to handle surges and spikes in traffic. This type of load balancing dynamically distributes the incoming traffic across multiple servers, preventing any single server from becoming overwhelmed. It allows for scalability and ensures that the network can handle sudden increases in demand.

Static load balancing, on the other hand, is used for predictable traffic patterns. In this scenario, traffic is evenly distributed across multiple servers based on predefined rules. This approach is ideal for scenarios where the traffic is consistent and can be easily predicted.

Cloud-based load balancing is another example, commonly used in cloud computing environments. Cloud load balancers distribute incoming traffic across multiple virtual machines or containers, ensuring efficient resource utilization and high availability.

Internal load balancing is employed to balance traffic distribution across the internal infrastructure. It ensures that requests are evenly distributed among backend servers, optimizing performance and preventing any single server from being overloaded.

Network load balancers operate at Layer 4 of the OSI model, distributing traffic based on network addresses and ports. They provide high-performance load balancing and can handle large amounts of traffic efficiently.

Different Types of Load Balancers

When it comes to load balancers, there are several different types to consider. These include:

- Hardware load balancers: These are physical devices dedicated to balancing traffic and distributing it across servers. They offer fast throughput and often include built-in security features.

- Software load balancers: These provide flexibility and cloud-based options. They are installed on servers and use software algorithms to distribute traffic.

- Virtual load balancers: These leverage cloud infrastructure for load balancing. They are virtual instances that can be deployed and managed in a cloud environment.

- Application delivery controller software: This type of load balancer combines load balancing with other features like caching, SSL offloading, and traffic management.

Each type has its own unique features and benefits. Understanding the different types of load balancers is crucial in order to choose the most suitable one for your network security needs.

Types of Load Balancers

There are various types of load balancers that operate at different layers of the network stack to efficiently distribute network traffic.

These load balancers play a crucial role in network security by ensuring that servers can handle the incoming connections and spikes in traffic without being overwhelmed.

- Layer 4 load balancers: These operate at the transport layer and make routing decisions based on IP addresses and port numbers. They are effective in balancing traffic across servers based on network-level information.

- Layer 7 load balancers: These operate at the application layer and make routing decisions based on the content of the application message, such as HTTP headers, URLs, or cookies. They are capable of more sophisticated load balancing, taking into account factors such as the type of application or specific user requests.

- Hardware load balancers: These are standalone devices designed specifically for load balancing purposes. They are optimized for performance and reliability, making them suitable for high-demand environments.

Using these different types of load balancers, network administrators can effectively distribute traffic across servers, ensuring optimal performance and maintaining network security.
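The difference between Layer 4 and Layer 7 decisions comes down to which fields the balancer looks at. The sketch below is illustrative only. The pool names, port mapping, and path rules are made up.

```python
from dataclasses import dataclass


@dataclass
class Request:
    src_ip: str
    dst_port: int
    path: str  # application data, only inspected by a Layer 7 balancer


# Hypothetical routing tables.
L4_POOLS = {443: "web-pool", 25: "mail-pool"}
L7_RULES = [("/api/", "api-pool"), ("/static/", "static-pool")]


def route_layer4(req: Request) -> str:
    """Layer 4: decide from network-level fields (here, the port)."""
    return L4_POOLS.get(req.dst_port, "default-pool")


def route_layer7(req: Request) -> str:
    """Layer 7: decide from request content (here, the URL path)."""
    for prefix, pool in L7_RULES:
        if req.path.startswith(prefix):
            return pool
    return "web-pool"


req = Request(src_ip="198.51.100.4", dst_port=443, path="/api/users")
l4_target = route_layer4(req)  # 'web-pool'
l7_target = route_layer7(req)  # 'api-pool'
```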

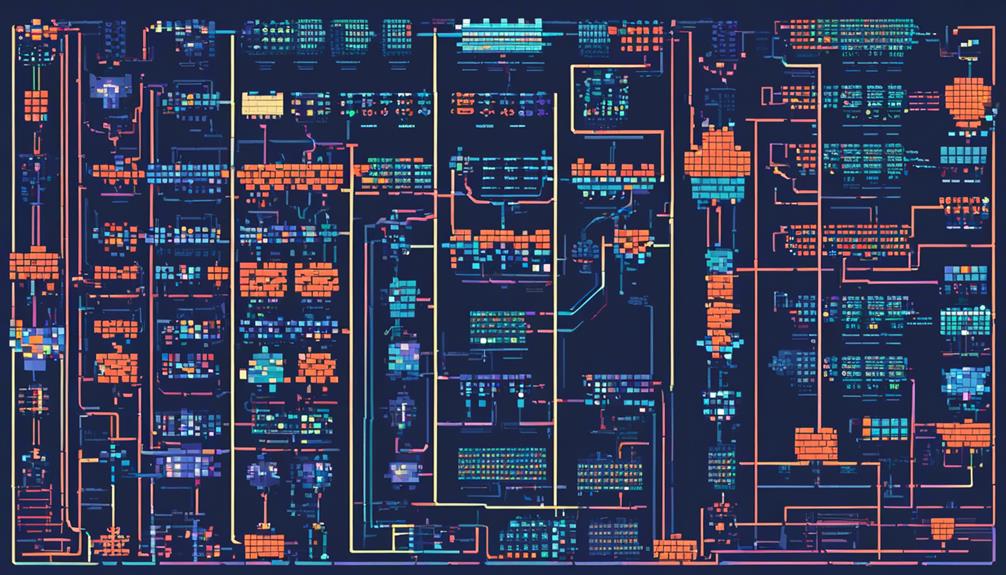

Load Balancer Algorithms

Load balancing algorithms play a critical role in the operation of different types of load balancers, ensuring efficient distribution of network traffic across servers while maintaining network security. There are several load balancing algorithms that are commonly used.

Round Robin is a simple algorithm where requests are distributed across servers sequentially. Threshold-based load balancing distributes requests based on a set threshold or limit. Random with two choices randomly selects two servers and sends the request to the one with fewer connections. Least connections sends new requests to the server with the fewest current connections. Least time sends requests to the server with the fastest response time and fewest active connections.
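Of the algorithms above, random with two choices is probably the least self-explanatory. The sketch below is a minimal illustration and assumes the pool has at least two servers. The function name and connection counts are hypothetical.

```python
import random


def two_choices(active: dict[str, int]) -> str:
    """Sample two distinct servers and keep the one with fewer connections."""
    first, second = random.sample(list(active), 2)
    return first if active[first] <= active[second] else second


active = {"app-1": 9, "app-2": 1, "app-3": 1}  # current connection counts
picks = [two_choices(active) for _ in range(100)]
# app-1 is never picked: whichever server it is paired with has fewer connections.
```

The appeal of this approach is that it avoids scanning every server for each request while still steering traffic away from the busiest ones.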

These algorithms are used in various load balancers, including hardware load balancers and DNS load balancers. By distributing traffic based on data such as connection count or response time, load balancing capabilities are maximized, leading to improved performance and enhanced network security.

Load Balancer Configuration

How a load balancer is configured depends on which type is deployed: a hardware load balancer, a software load balancer, a virtual load balancer, or application delivery controller software. Each type offers unique features and benefits that cater to specific network security requirements.

Hardware load balancers use specialized processors for high throughput and security processing. They are well suited to handling heavy network traffic, and many include features that help mitigate DDoS attacks.

Software load balancers offer flexibility, scalability, and cloud-based options. They are popular for their ability to optimize server resources and adapt to changing network demands.

Virtual load balancers are commonly used in cloud environments. They provide dynamic traffic distribution and network load balancing at different layers of the OSI model. They offer efficient resource utilization and improved scalability.

Cloud-Based Load Balancers

Cloud-based load balancers distribute traffic across cloud computing environments. Depending on the service, they operate at Layer 4 of the OSI model, routing on IP addresses and ports, or at Layer 7, routing on HTTP or HTTPS request content. Both kinds are designed to handle the increasing demands of modern network security requirements.

One of the key advantages of cloud-based load balancers is their ability to balance traffic distribution across internal infrastructure. They can dynamically add or drop servers based on traffic load, ensuring prompt responses even during heavy loads. This flexibility allows organizations to scale their network resources up or down as needed, optimizing performance and resource utilization.
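The decision to add or drop servers can be reduced to a small calculation. The sketch below assumes each server handles a known, fixed request rate. The function name, capacity figure, and bounds are hypothetical, and real autoscalers use richer signals.

```python
import math


def desired_server_count(requests_per_second: float,
                         capacity_per_server: float,
                         min_servers: int = 2,
                         max_servers: int = 20) -> int:
    """Return how many servers the current load calls for, within bounds."""
    needed = math.ceil(requests_per_second / capacity_per_server)
    # Never scale below the floor or above the ceiling.
    return max(min_servers, min(max_servers, needed))


peak = desired_server_count(950, 100)    # 10 servers during a spike
quiet = desired_server_count(50, 100)    # floor of 2 servers when idle
capped = desired_server_count(5000, 100) # capped at 20 servers
```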

Cloud-based load balancers are particularly popular in cloud environments due to their additional features and flexibility. They can seamlessly integrate with other cloud services, such as DNS and application delivery controllers, to provide a comprehensive network security solution. By utilizing load balancing algorithms, these balancers can intelligently distribute traffic based on various factors, such as server health, network latency, and application-specific requirements.

Compared to hardware load balancers, cloud-based load balancers offer several advantages. They eliminate the need for expensive hardware investments and complex configurations, as the load balancing functionality is provided as a service. Additionally, they can easily adapt to changing network environments and scale horizontally to handle increasing traffic demands.

Load Balancer Technology

Load balancer technology encompasses a range of solutions, including hardware, software, virtual, and application delivery controllers, that optimize server resource usage and distribute user requests efficiently. Load balancers play a crucial role in network security by ensuring the availability, scalability, security, and performance of applications.

Here are three key aspects of load balancer technology:

- Hardware Load Balancers: These are physical devices that sit between the client and the server. They efficiently distribute incoming traffic across multiple servers based on predefined algorithms. Hardware load balancers offer high performance and are capable of handling heavy traffic loads.

- Software Load Balancers: These load balancers operate as software applications running on standard servers. They provide similar functionality to hardware load balancers but can be easily deployed and scaled in virtualized environments. Software load balancers are cost-effective and offer flexibility in terms of configuration and management.

- Load Balancing Algorithms: Load balancers use various algorithms to distribute traffic among servers. Round robin is a simple algorithm that evenly distributes requests to each server. Least connections assigns requests to the server with the fewest active connections. Random with two choices randomly selects between two servers to distribute traffic. These algorithms ensure efficient traffic distribution and help optimize server load.

Load balancer technology also helps protect against distributed denial-of-service (DDoS) attacks. By distributing incoming traffic across multiple servers, load balancers make it harder for an attack to overwhelm any single server, which helps keep the network available. They can also redirect traffic in case of server failure, further enhancing network security. Load balancing is one layer of DDoS defense rather than a complete solution.
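Redirecting traffic away from a failed server can be sketched as filtering the pool through a health probe before selecting a target. The probe here is a stub that consults a set of known-down servers. In practice it would be a TCP or HTTP check. All names are illustrative.

```python
from typing import Callable


def healthy_pool(servers: list[str], probe: Callable[[str], bool]) -> list[str]:
    """Keep only the servers that pass the health probe."""
    return [server for server in servers if probe(server)]


def route(request_number: int, servers: list[str],
          probe: Callable[[str], bool]) -> str:
    """Round robin over the servers that are currently healthy."""
    pool = healthy_pool(servers, probe)
    if not pool:
        raise RuntimeError("no healthy servers available")
    return pool[request_number % len(pool)]


down = {"app-2"}  # pretend this server has failed


def stub_probe(server: str) -> bool:
    return server not in down


targets = [route(i, ["app-1", "app-2", "app-3"], stub_probe) for i in range(4)]
print(targets)  # ['app-1', 'app-3', 'app-1', 'app-3']
```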

How F5 Can Help

F5 offers a comprehensive suite of load balancing solutions that address specific organizational needs and provide a range of benefits to optimize application performance, enhance network security, and improve operational efficiency.

F5's load balancers distribute client requests across multiple servers to ensure efficient utilization of computing capacity and eliminate single points of failure.

F5 provides both hardware and software-based load balancing solutions. Hardware load balancers, such as F5's BIG-IP appliances, are designed to handle high volumes of traffic and improve response time by making routing decisions based on factors like server load, response time, and the fewest current connections. At OSI Layer 4, they distribute traffic based on IP address, port number, and protocol.

F5 also offers Distributed Cloud DNS load balancing, which distributes client requests across multiple server locations to improve availability and reduce response time. It works at the DNS level (DNS is an application-layer, or Layer 7, protocol) and can route traffic based on factors like geographic location, network latency, and server load.

One of the key benefits of using F5's load balancing solutions is their insight capabilities. F5 load balancers provide detailed analytics and reporting, allowing organizations to gain visibility into their network traffic and application performance. This insight helps organizations identify potential bottlenecks, optimize resource allocation, and make informed decisions to improve their network security.

In addition, F5 load balancers support the creation and operation of adaptive applications, which can dynamically scale resources based on demand. This flexibility ensures that applications can handle fluctuations in traffic and maintain high performance levels.

Load Balancing and Network Security

Load balancing plays a crucial role in enhancing network security by optimizing application availability, scalability, and performance. By distributing traffic across multiple servers, load balancers ensure that no single server is overwhelmed, reducing the risk of downtime due to server capacity constraints. This traffic distribution also helps prevent network congestion, ensuring that applications are accessible to users even during peak periods.

- Load balancing algorithms: Load balancers use algorithms such as round robin, threshold, random with two choices, least connections, and least time to distribute traffic. These algorithms balance the workload across servers, ensuring efficient utilization of resources.

- Application delivery controllers: An application delivery controller (ADC) is a load balancer with additional traffic management features. ADCs act as intermediaries between clients and servers, directing traffic based on predefined rules. This position lets them perform tasks such as SSL offloading, which reduces the processing burden on servers and keeps certificate handling in one place.

- Hardware load balancers: Load balancers can be implemented in hardware or software. Hardware load balancers are dedicated devices designed specifically for load balancing and traffic routing. They offer high-performance capabilities and advanced security features, making them well suited to large-scale deployments.

In addition to optimizing traffic distribution, load balancers also contribute to network security by providing features such as DNS-based load balancing and traffic routing based on Layer 7 (application layer) information. These capabilities enable load balancers to intelligently route traffic to the most appropriate server based on factors such as server health, geographic location, and application-specific requirements. By effectively managing traffic and ensuring the availability and scalability of applications, load balancers play a vital role in network security.

Load Balancer Placement in Network Security

Placing load balancers strategically in network security architecture is essential for ensuring secure and efficient traffic distribution. Load balancers play a crucial role in distributing traffic across multiple servers, optimizing response time, and minimizing single points of failure. By intelligently managing network traffic, load balancers can enhance the overall performance and reliability of a system.

One key aspect of load balancer placement is the decision of whether to position them behind a firewall. Placing load balancers behind a firewall provides an added layer of protection, shielding web servers from direct attacks and reducing the risk of exposing vulnerable load balancers to the internet. This configuration also allows for more control over access from internal and external networks, enhancing the overall security posture of the system.

Additionally, some load balancers include firewall functionality, which in certain deployments can reduce or remove the need for a separate firewall. This consolidated approach can be secure, especially when combined with web application firewall functionality. However, load balancers and firewalls can themselves become single points of failure. To mitigate this risk, deploy redundant equipment in active/passive high availability (HA) clusters so that the configuration is fully redundant.
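One common way to build an active/passive pair is with heartbeats: the standby takes over when the primary stops reporting. The sketch below is a simplified illustration. The node names, timestamps, and three-second timeout are assumptions, and production HA protocols handle many more edge cases.

```python
from typing import Optional


def active_node(last_heartbeat: dict[str, float],
                priority: list[str],
                now: float,
                timeout: float = 3.0) -> Optional[str]:
    """Return the highest-priority node whose heartbeat is still fresh."""
    for node in priority:
        # A node with no recorded heartbeat is treated as infinitely stale.
        if now - last_heartbeat.get(node, float("-inf")) <= timeout:
            return node
    return None


beats = {"lb-primary": 100.0, "lb-standby": 104.0}  # last heartbeat times (s)
order = ["lb-primary", "lb-standby"]

before = active_node(beats, order, now=102.0)  # primary is 2 s old: still active
after = active_node(beats, order, now=105.0)   # primary is 5 s old: standby takes over
```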

Moreover, load balancers can make routing decisions based on various factors, such as response time or the fewest active connections, to distribute traffic effectively. By intelligently redirecting traffic to the most suitable server, load balancers optimize resource utilization and prevent any one server from being overwhelmed.

Load Balancer Best Practices in Network Security

Positioning load balancers strategically in network security architecture is crucial to ensure optimal traffic distribution and enhance overall system performance and reliability. Load balancers play a critical role in network security by efficiently distributing incoming traffic across multiple servers, mitigating the risk of single points of failure, and improving the availability and scalability of applications.

Here are some best practices for implementing load balancers in network security:

- Choose the right type of load balancer: There are different types of load balancers available, including hardware load balancers, software load balancers, and application delivery controllers. Consider factors such as scalability, performance, and security requirements when selecting the appropriate load balancer for your network.

- Implement redundancy: To ensure high availability and reliability, it is essential to deploy load balancers in a redundant configuration. This means having multiple load balancers in an active-active or active-passive setup, so that if one load balancer fails, the others can seamlessly take over the traffic distribution.

- Utilize DNS load balancing: DNS load balancing is a technique that uses the Domain Name System (DNS) to distribute traffic across multiple servers. By configuring multiple IP addresses for a single domain name, DNS load balancing can distribute traffic based on factors such as geographical location or server health, or simply in round-robin order. Because resolvers cache answers for the record's time to live (TTL), changes take effect gradually.
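The round-robin variant of DNS load balancing can be sketched as a name server that rotates its list of addresses on every query. The class below is a toy model rather than a DNS implementation. The addresses come from the documentation range 192.0.2.0/24.

```python
from collections import deque


class RoundRobinDNS:
    """Return all records for a name, rotated by one on each query."""

    def __init__(self, addresses: list[str]) -> None:
        self._records = deque(addresses)

    def resolve(self) -> list[str]:
        answer = list(self._records)
        # Move the first address to the end for the next query.
        self._records.rotate(-1)
        return answer


dns = RoundRobinDNS(["192.0.2.10", "192.0.2.11", "192.0.2.12"])
first_answer = dns.resolve()   # starts with 192.0.2.10
second_answer = dns.resolve()  # starts with 192.0.2.11
```

Clients typically connect to the first address in the answer, so rotating the order spreads new connections across the servers.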

By following these best practices, network administrators can optimize the performance and security of their applications, ensuring efficient traffic distribution and minimizing the risk of downtime.

Load balancers, when strategically positioned in the network security architecture, provide a reliable and scalable solution for managing the ever-increasing demands of modern applications.

Sources:

- https://www.nginx.com/resources/glossary/load-balancing/

- https://www.f5.com/services/resources/glossary/load-balancer

Frequently Asked Questions

What Is the Purpose of a Load Balancer?

The purpose of a load balancer is to evenly distribute network traffic across multiple servers to ensure optimal performance and availability of applications.

Load balancing algorithms are used to determine how traffic is distributed, taking into account factors such as server capacity and response times.

Load balancers also provide scalability by allowing additional servers to be added or removed as needed.

Configuring load balancers involves considering best practices, such as ensuring redundancy and high availability, and integrating them with other network security tools for enhanced protection.

Monitoring and troubleshooting load balancers is crucial to identify and address any issues that may impact network performance.

What Is the Purpose of a Network Load Balancer?

The purpose of a network load balancer is to evenly distribute incoming traffic among multiple servers, optimizing resource usage and preventing server overload. By redirecting traffic to healthy servers, load balancers help maintain application availability and ensure continuous access to web services.

They improve application scalability by efficiently managing traffic and dynamically adjusting server resources. Load balancers also enhance application performance by reducing latency, optimizing server response times, and efficiently distributing client requests.

Additionally, they contribute to application security by helping to absorb DDoS traffic and by providing SSL termination, so that sensitive data is encrypted in transit between clients and the load balancer.

What Are the Three Types of Load Balancers?

Load balancers are essential components in network infrastructure that distribute incoming network traffic across multiple servers to optimize performance and ensure high availability.

There are three main types of load balancers: hardware load balancers, software load balancers, and virtual load balancers. Each type offers different load balancing techniques, scalability options, deployment strategies, and configuration best practices.

Additionally, load balancers in cloud environments leverage virtualization and incorporate failover and high availability mechanisms for improved performance and reliability.

What Are the Security Issues With Load Balancers?

Load balancers are an essential component of network security, but they also present potential vulnerabilities and security issues. Common load balancer attacks include Distributed Denial of Service (DDoS) attacks, which can overwhelm the load balancer and disrupt service.

Security best practices for load balancers include securing their configurations, implementing authentication mechanisms, and addressing encryption concerns. Additionally, logging and monitoring load balancer activity can help detect and prevent security breaches.

In cloud environments, load balancers should be protected against DDoS attacks and take advantage of additional security features offered by the cloud provider.